Series co-author: Container Service @谢石

In recent years, enterprise infrastructure has been moving steadily toward cloud native, from the initial shift to IaaS to today's microservices, and customers' demands for finer granularity and better observability have grown with it. Container networking has been evolving quickly to deliver higher performance and higher pod density, which inevitably raises the bar for observing cloud-native networks. To improve that observability, and to make business traffic paths easier to read for customers and for front-line and back-line engineers, the ACK engineering team and AES jointly developed ACK Net-Exporter and this series on data-plane observability for cloud-native networks. The goal is to help customers and support engineers understand the cloud-native network architecture, lower the barrier to observing it, improve the troubleshooting experience for customer operations and after-sales staff, and make cloud-native network links more stable.

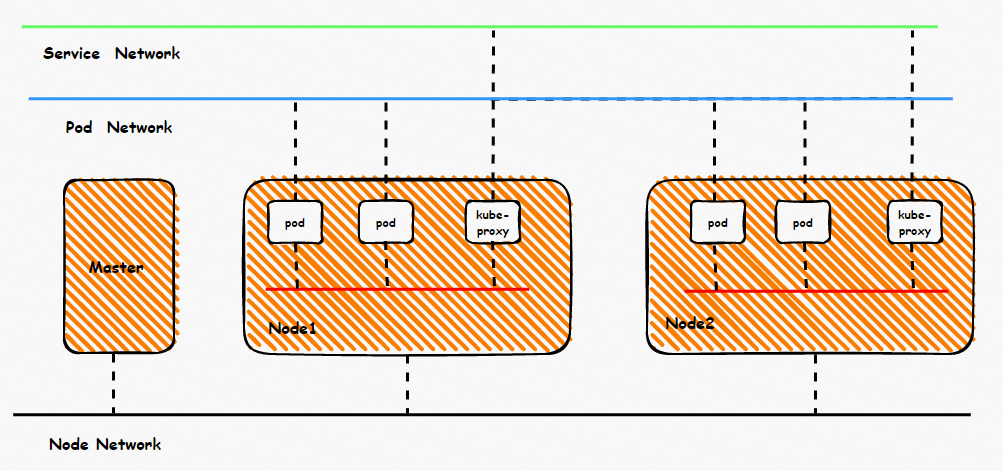

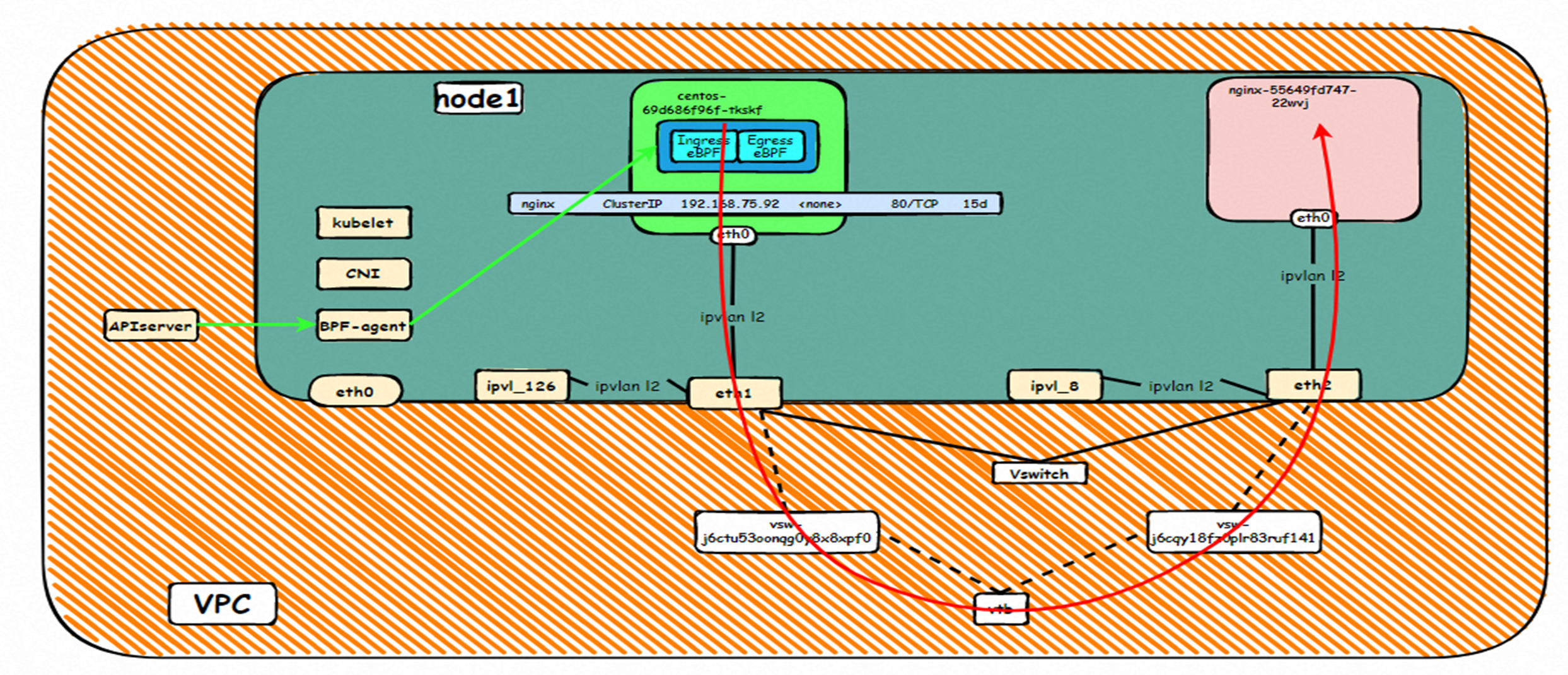

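Viewed from above, a container network consists of three parts: the Pod CIDR, the Service CIDR, and the Node CIDR. These three networks must interconnect and enforce access control. What technology makes that possible? What does the end-to-end path look like, and what are its limits? How do Flannel and Terway differ, and how does network performance compare across modes? Customers have to choose based on their business scenario before building the cluster, because the network architecture cannot be changed afterwards, so they need a solid understanding of each architecture's characteristics. The simplified diagram illustrates this: the Pod network must provide connectivity and control between pods on the same ECS instance as well as between pods on different ECS instances, and when a pod accesses a Service, the backend may be on the same ECS instance or on another one. The forwarding path differs between modes, and so does the behavior the application observes.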

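This article is part four of the series "A Panoramic Analysis of Container Network Data Links". It covers the data-plane forwarding paths in Kubernetes Terway EBPF+IPVLAN mode. First, understanding the forwarding path in each scenario explains why customers see the access results they do, which helps them optimize their business architecture. Second, a detailed understanding of the paths tells customer operations staff and Alibaba Cloud engineers at which points along the path to deploy observation when the container network jitters, so they can narrow down the direction and cause of the problem.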

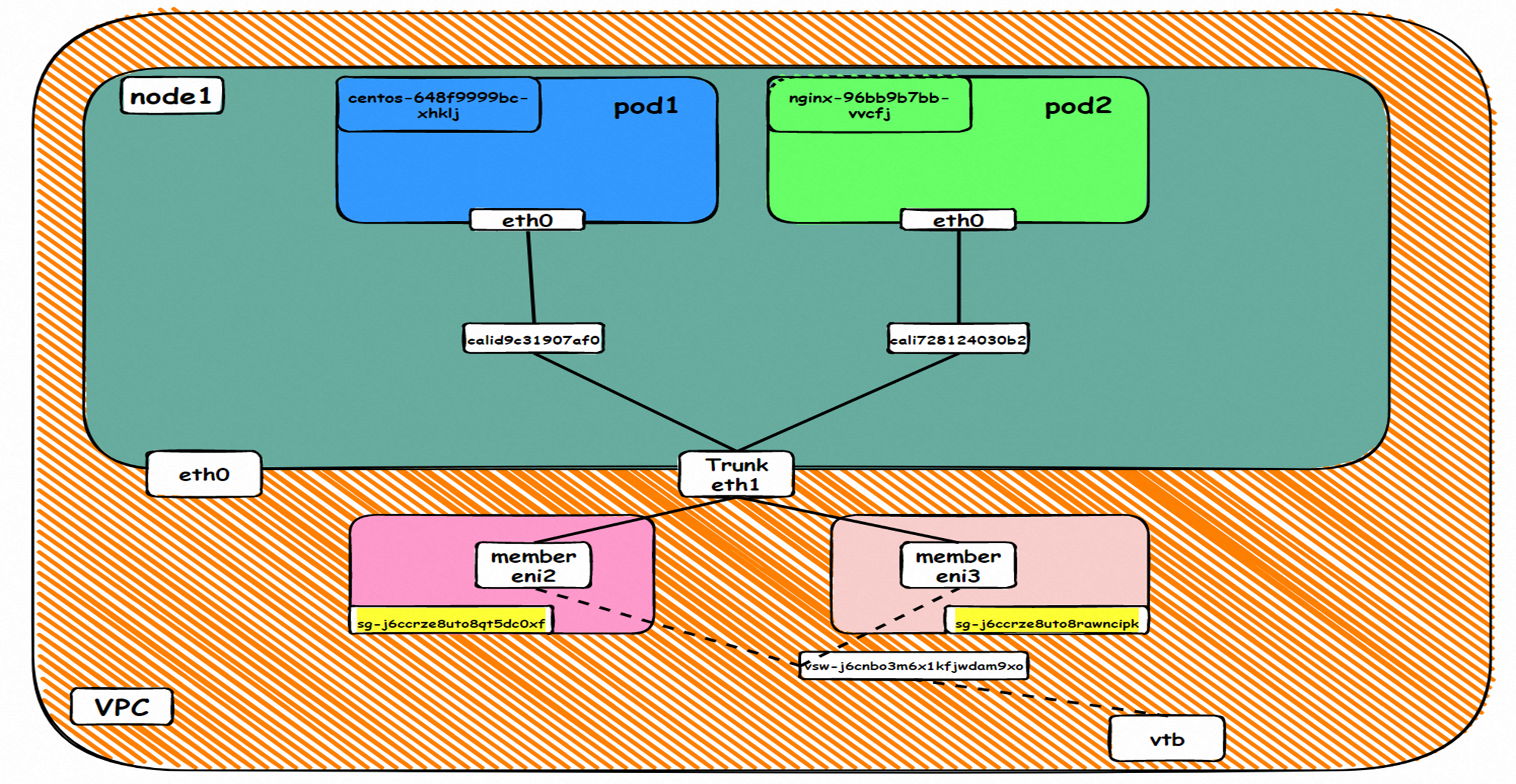

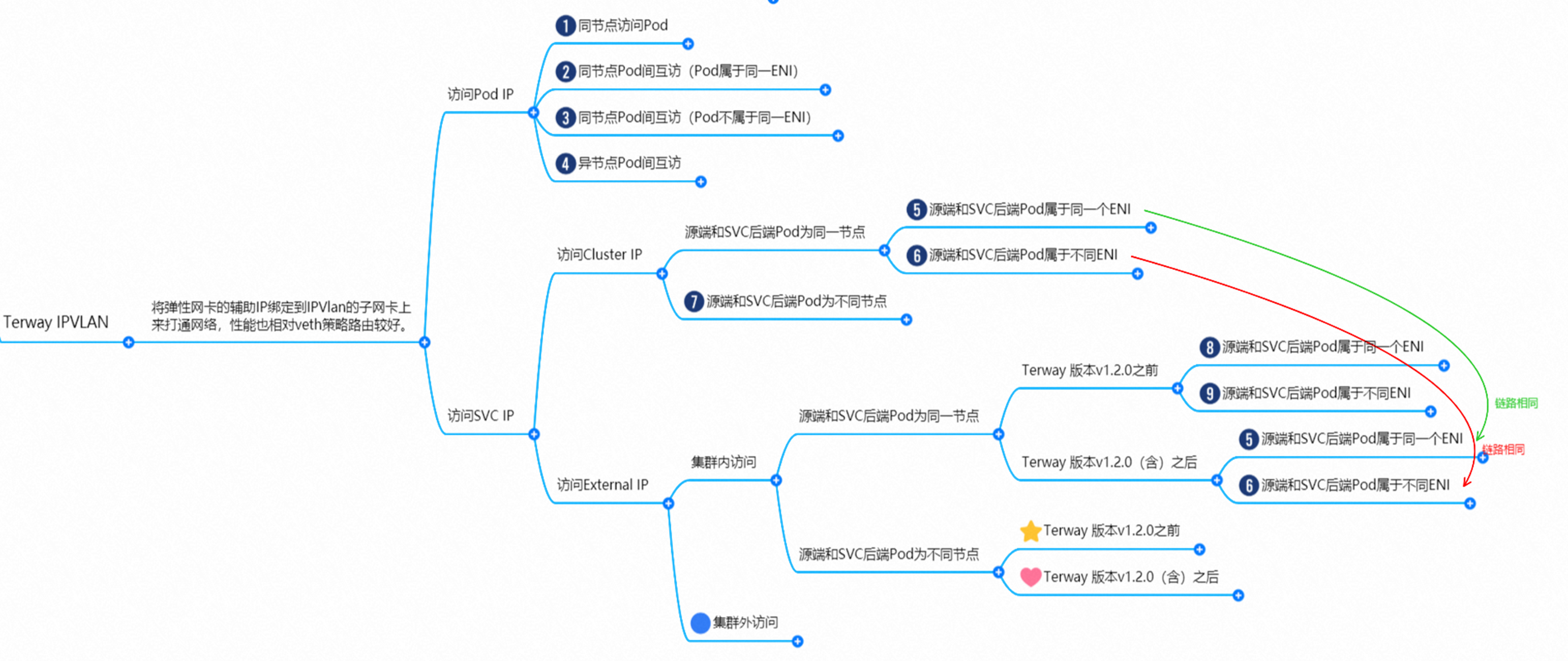

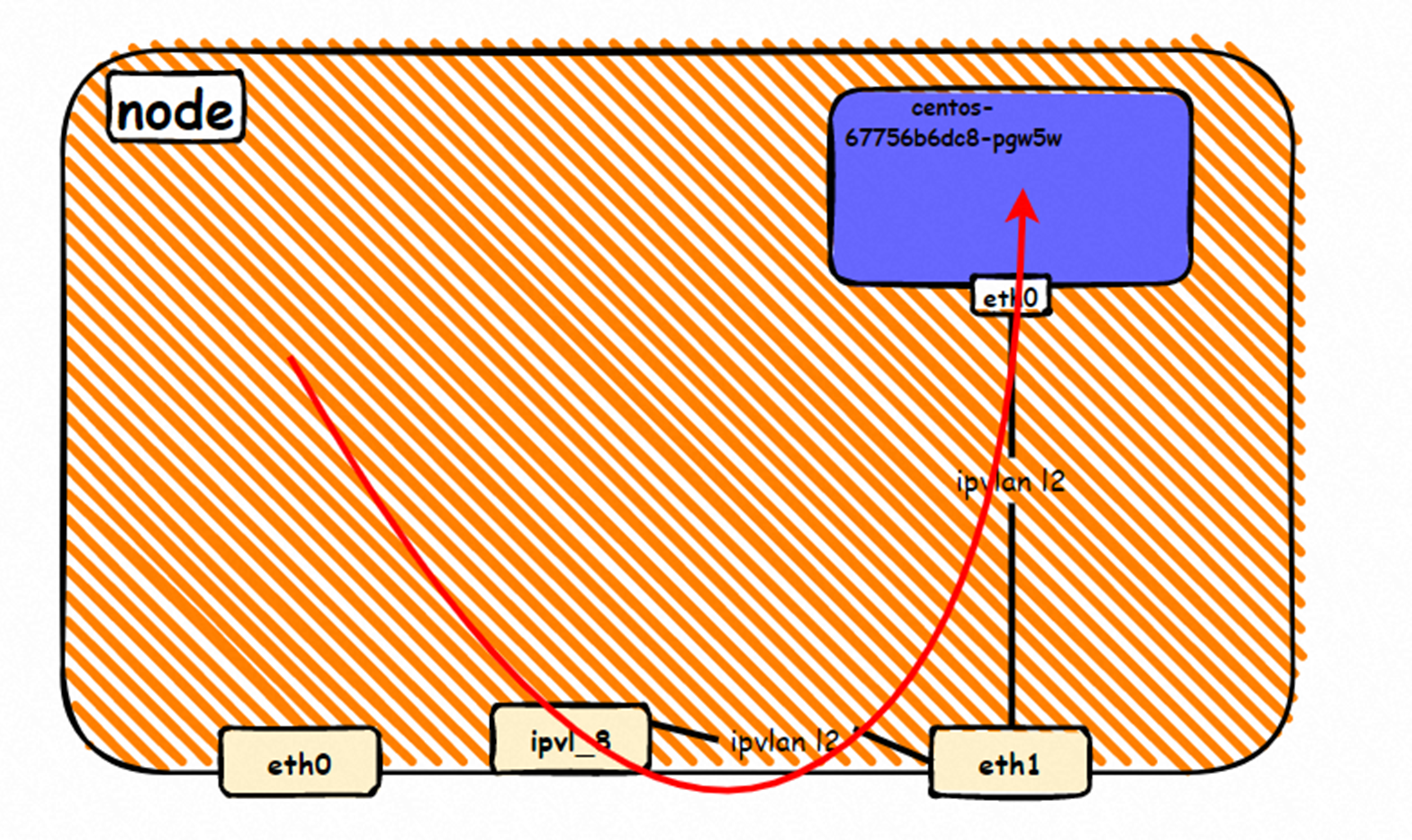

The series consists of:

- Part 1: A Panoramic Analysis of Alibaba Cloud Container Network Data Links (1): Flannel
- Part 2: A Panoramic Analysis of Alibaba Cloud Container Network Data Links (2): Terway ENI
- Part 3: A Panoramic Analysis of Alibaba Cloud Container Network Data Links (3): Terway ENIIP
- Part 4: A Panoramic Analysis of Alibaba Cloud Container Network Data Links (4): Terway IPVLAN+EBPF
- Part 5: A Panoramic Analysis of Alibaba Cloud Container Network Data Links (5): Terway ENI-Trunking
- Part 6: A Panoramic Analysis of Alibaba Cloud Container Network Data Links (6): ASM Istio

An elastic network interface (ENI) can be configured with multiple secondary IPs. Depending on the instance type, a single ENI can be assigned 6 to 20 secondary IPs. ENI multi-IP mode assigns these secondary IPs to containers, which greatly increases the scale and density of pod deployment. For connectivity, Terway supports two schemes: veth pair with policy routing, and IPVLAN. Linux kernels 4.2 and later support IPVLAN virtual networking, which lets a single NIC present multiple sub-interfaces with different IP addresses. Terway uses this virtual network type and binds the ENI's secondary IPs to IPVLAN sub-interfaces to connect the network. This keeps the ENI multi-IP network structure simple and performs better than veth policy routing.

The CIDR used by pods is the same as the node CIDR.

Inside the pod there is a single NIC, eth0, whose IP is the pod IP. Its MAC address does not match the MAC address of the ENI shown in the console, and the ECS instance has several ethX NICs, which shows that the secondary ENI is not mounted directly into the pod's network namespace.

The pod has only a default route pointing to eth0, so traffic to any destination enters and leaves the pod through eth0.
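The density argument above can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name and the assumption that one ENI (the primary, eth0) is reserved for the node itself are not stated in the article, and the real quota depends on the instance type.

```python
def max_pod_ips(eni_count: int, secondary_ips_per_eni: int, reserved_enis: int = 1) -> int:
    """Rough upper bound on pod IPs a node can hand out in ENI multi-IP mode.

    Assumption (not from the article): `reserved_enis` ENIs are kept for the
    node itself and contribute no pod IPs.
    """
    if eni_count < reserved_enis:
        raise ValueError("eni_count must be at least reserved_enis")
    if secondary_ips_per_eni < 0:
        raise ValueError("secondary_ips_per_eni must not be negative")
    return (eni_count - reserved_enis) * secondary_ips_per_eni


# Hypothetical node: 3 ENIs, 10 secondary IPs each, primary ENI kept for the node.
print(max_pod_ips(3, 10))
```

With one pod per ENI the same hypothetical node would host only two pods, which is why sharing an ENI through secondary IPs raises density so much.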

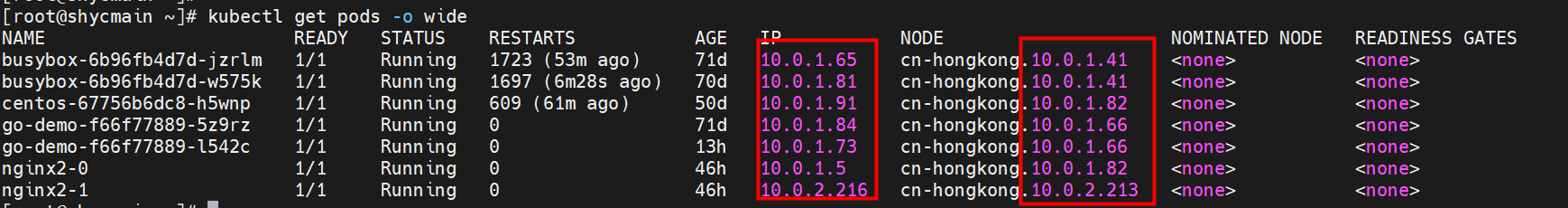

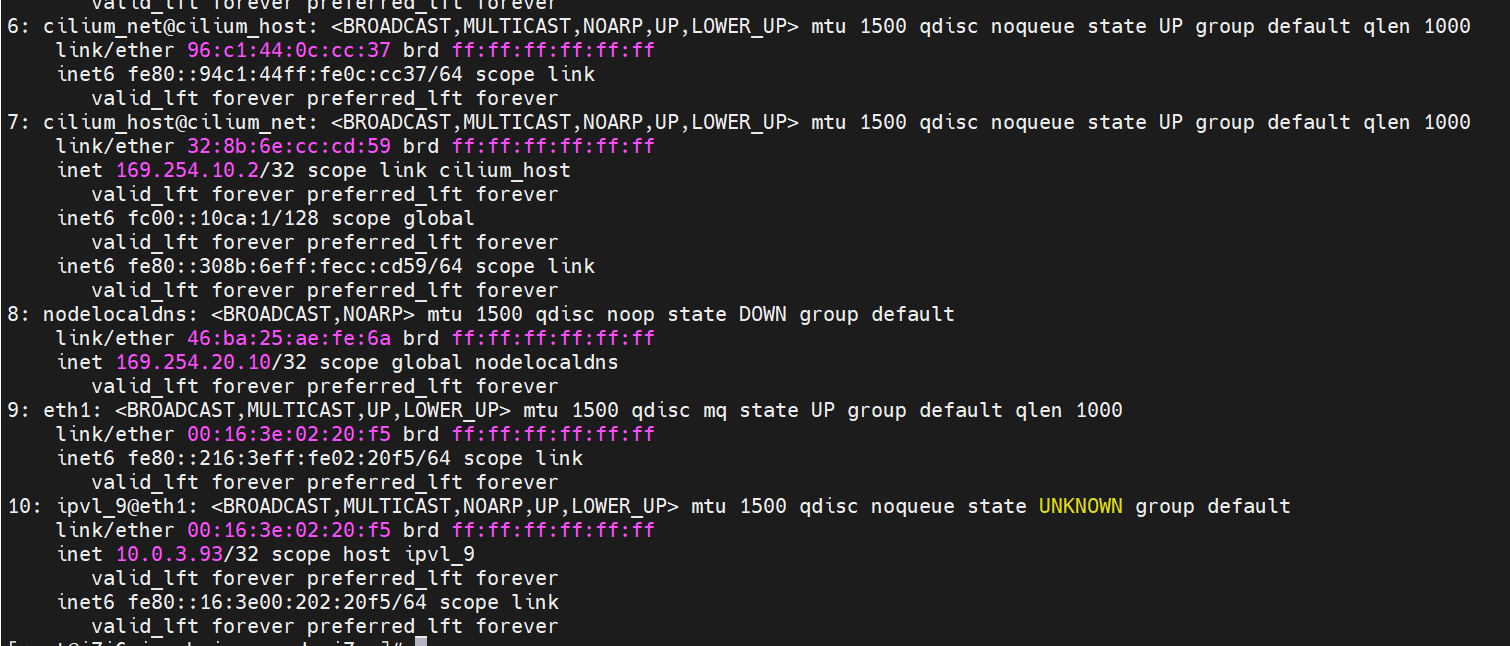

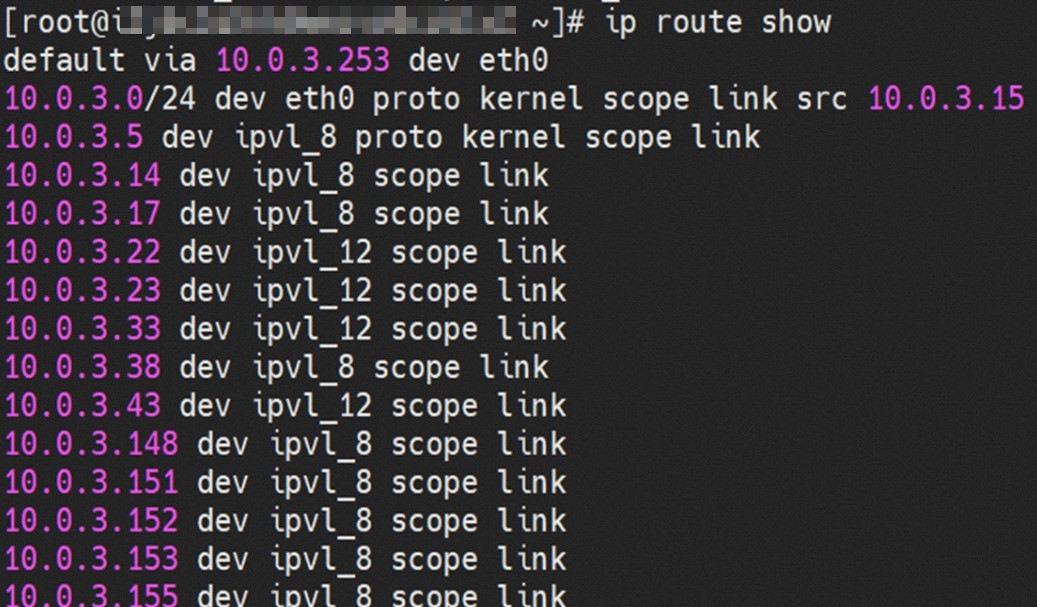

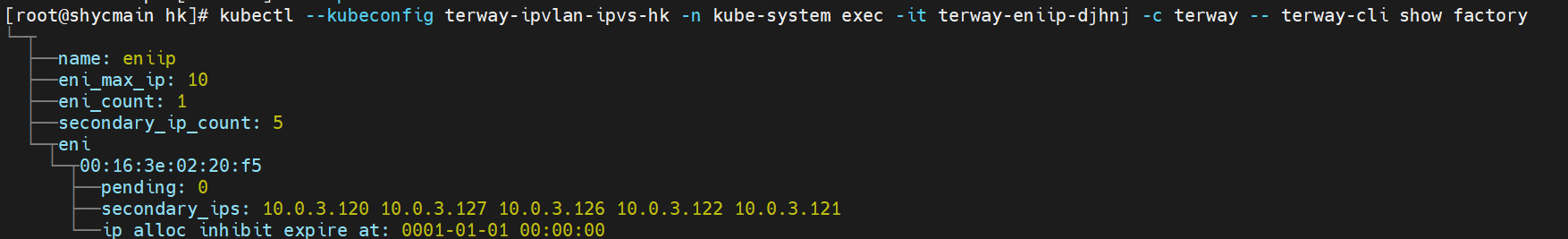

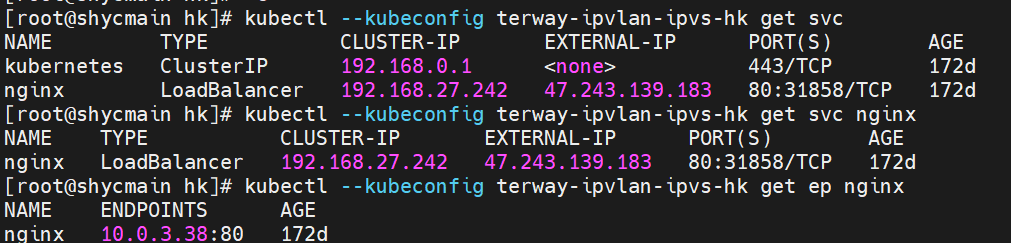

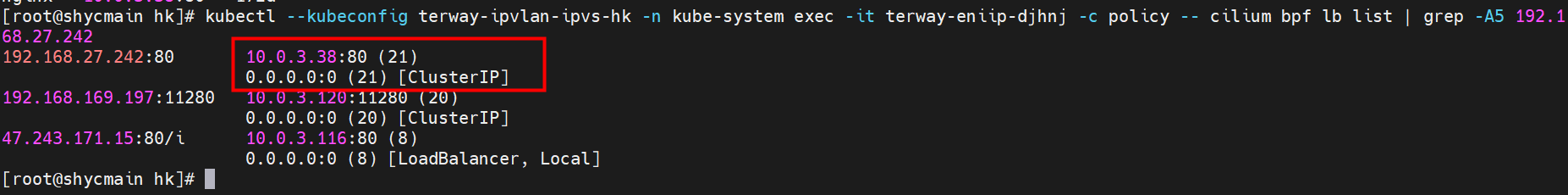

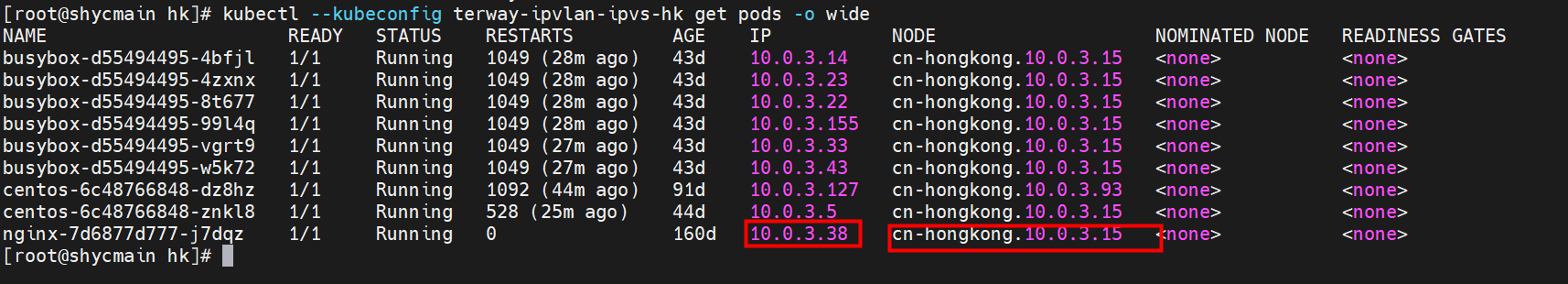

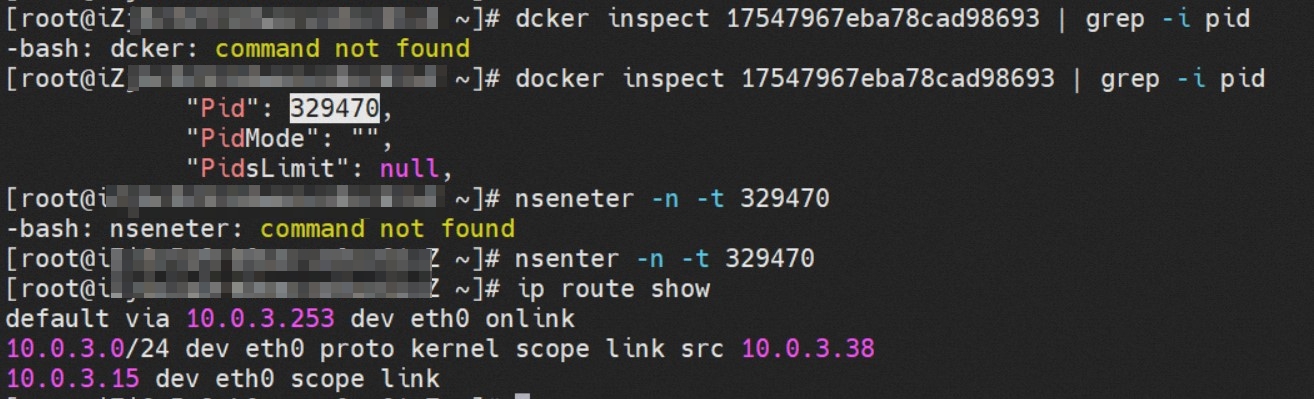

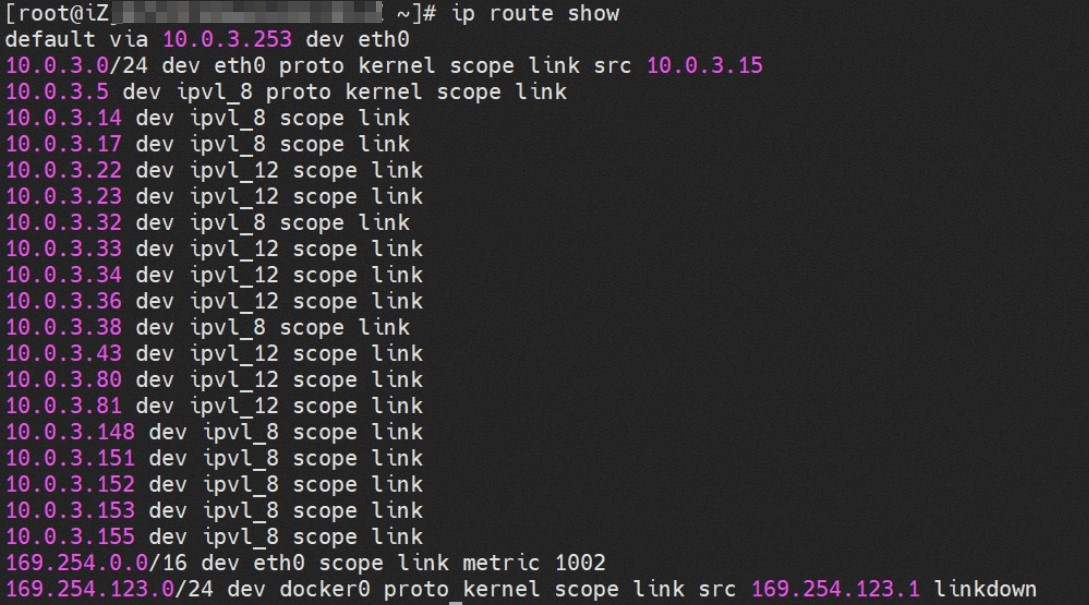

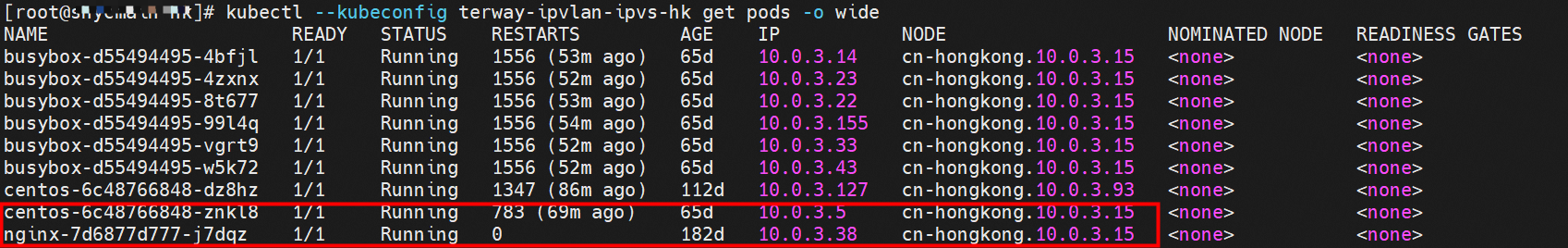

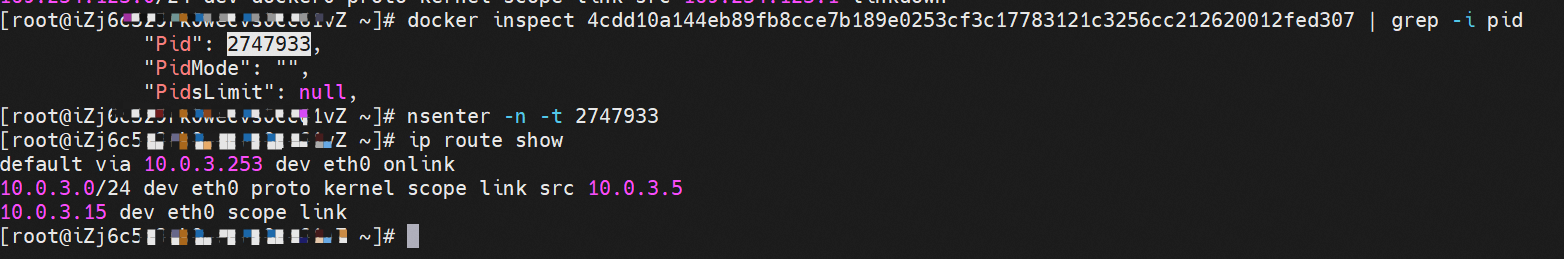

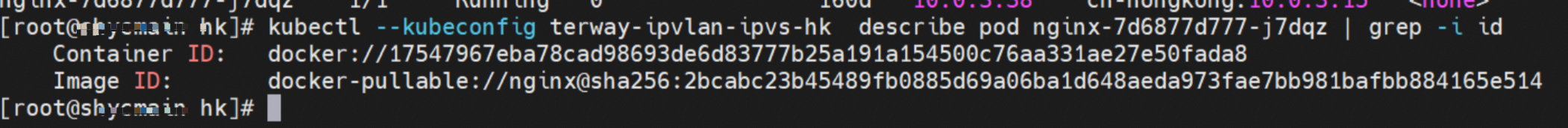

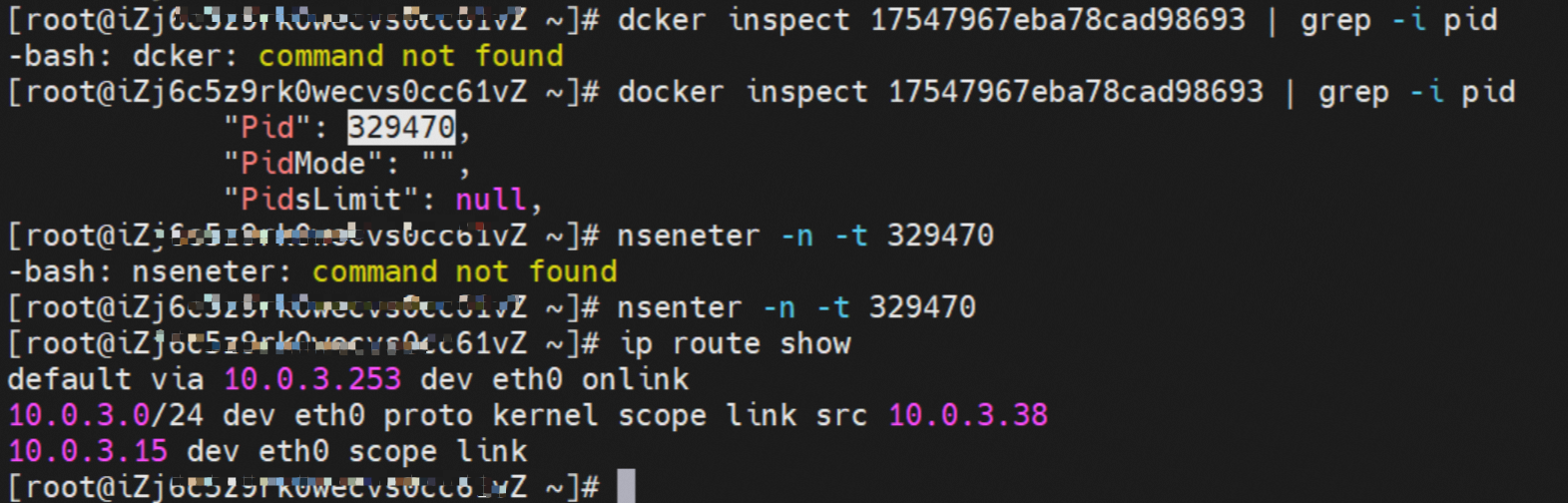

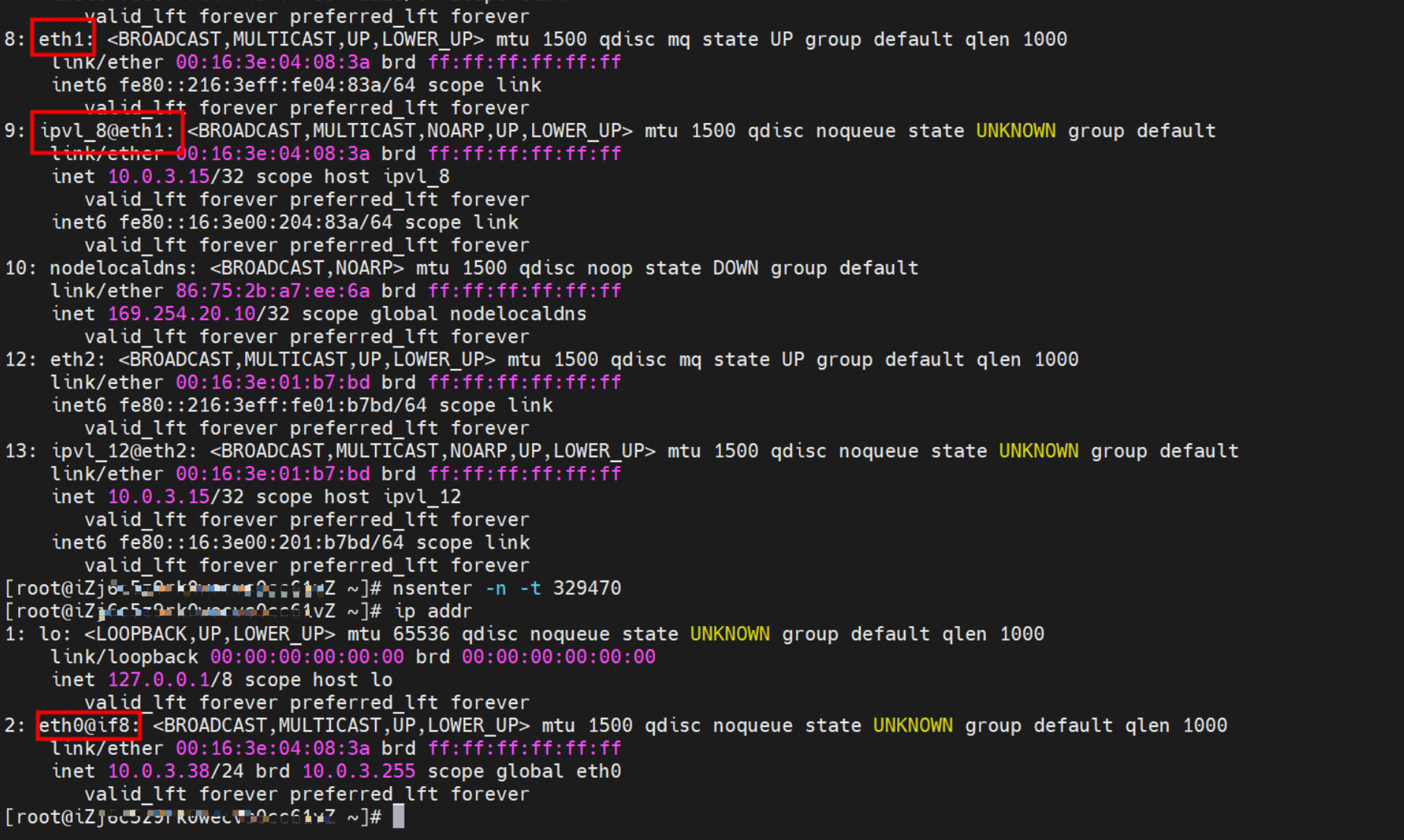

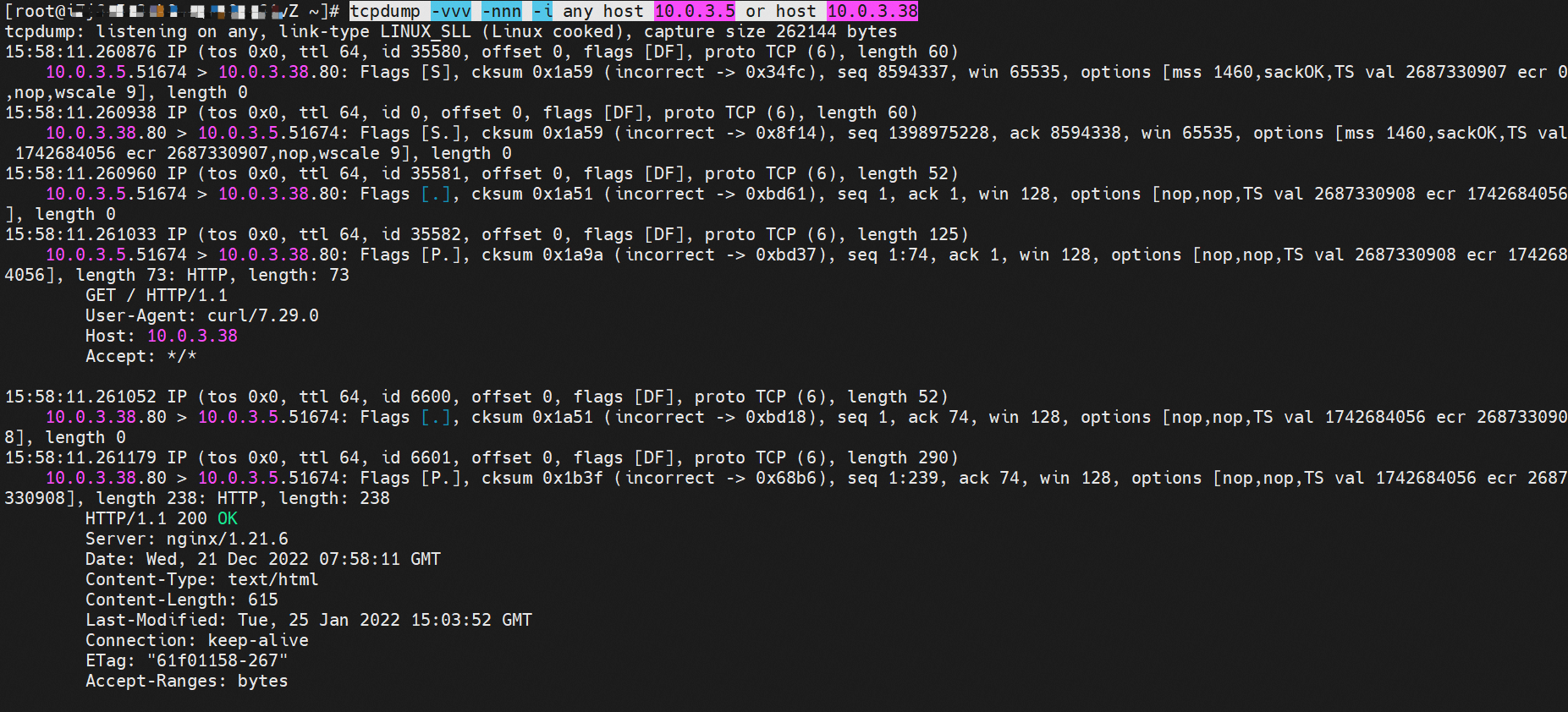

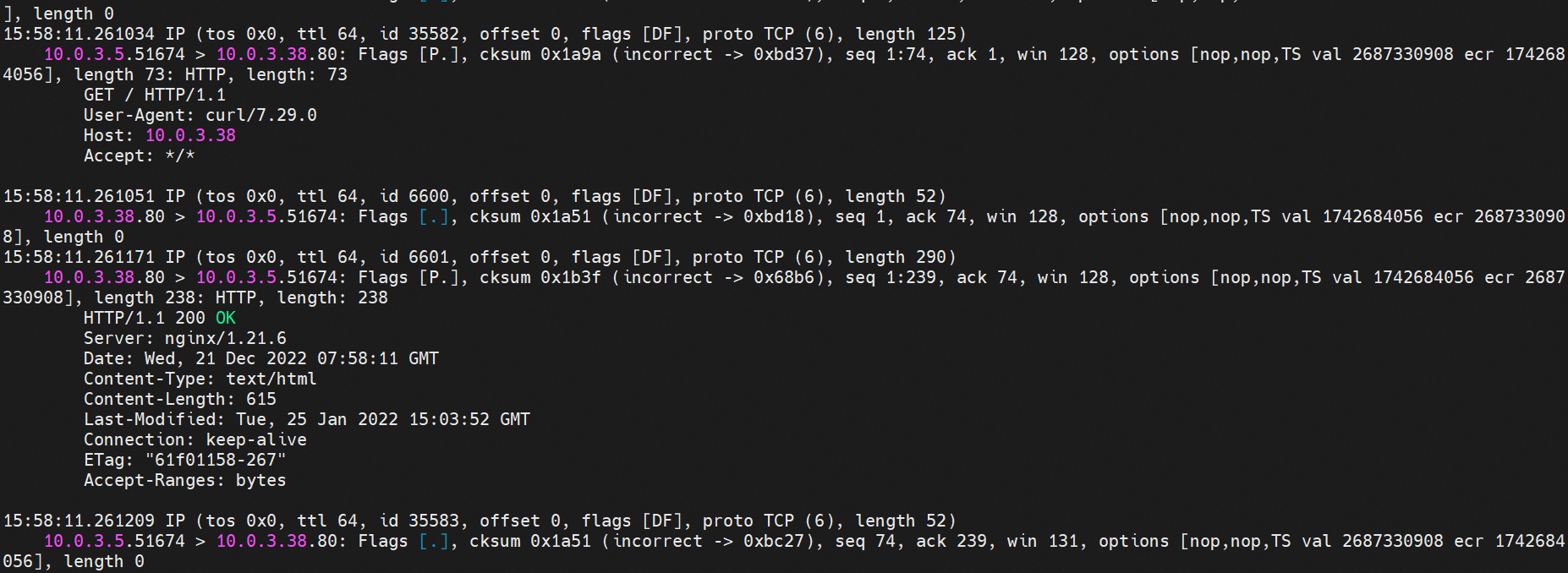

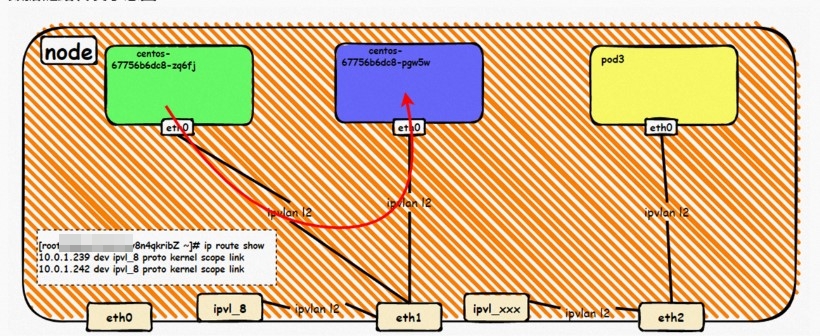

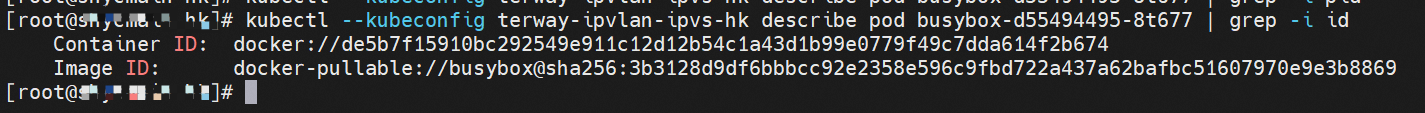

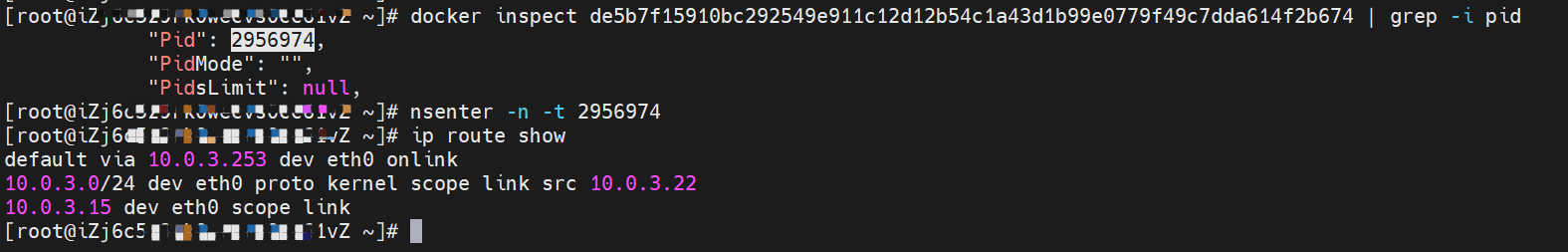

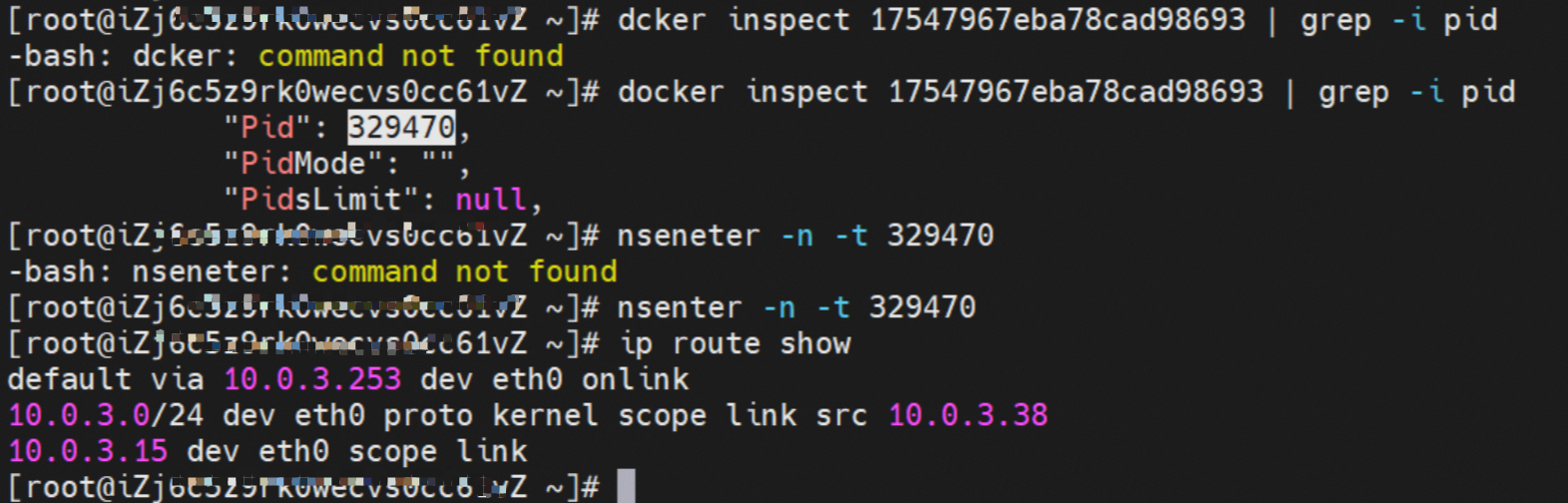

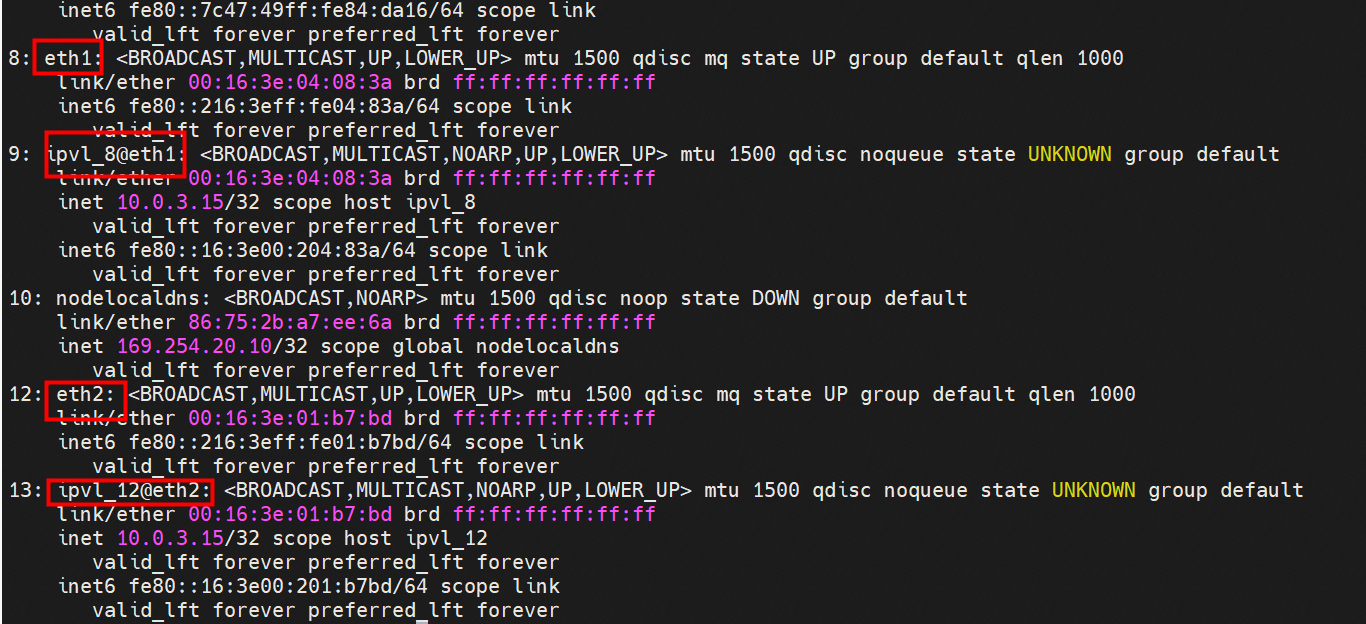

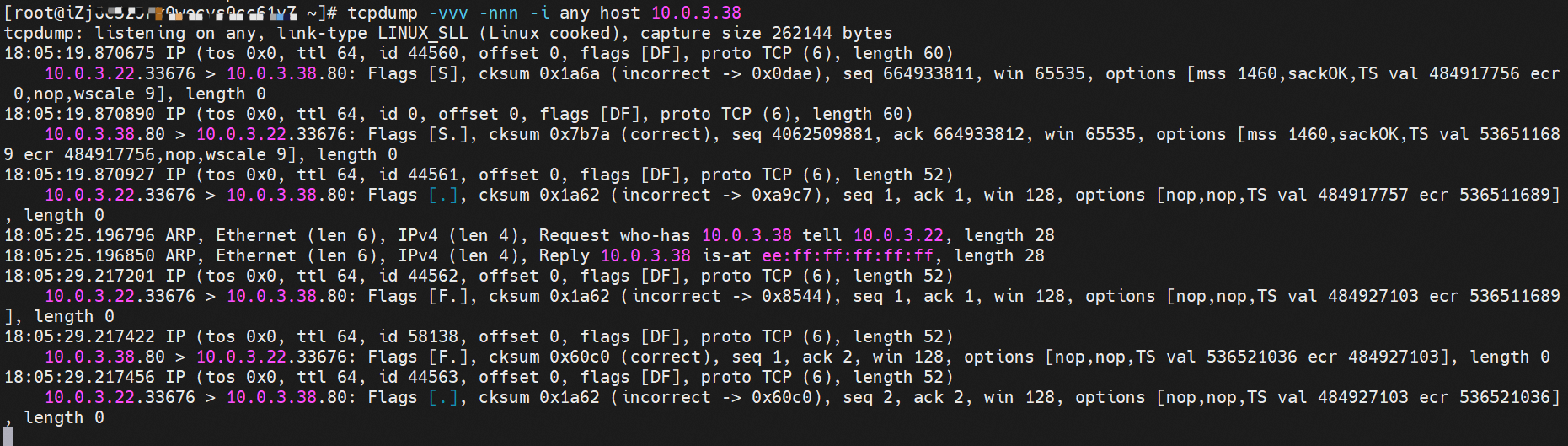

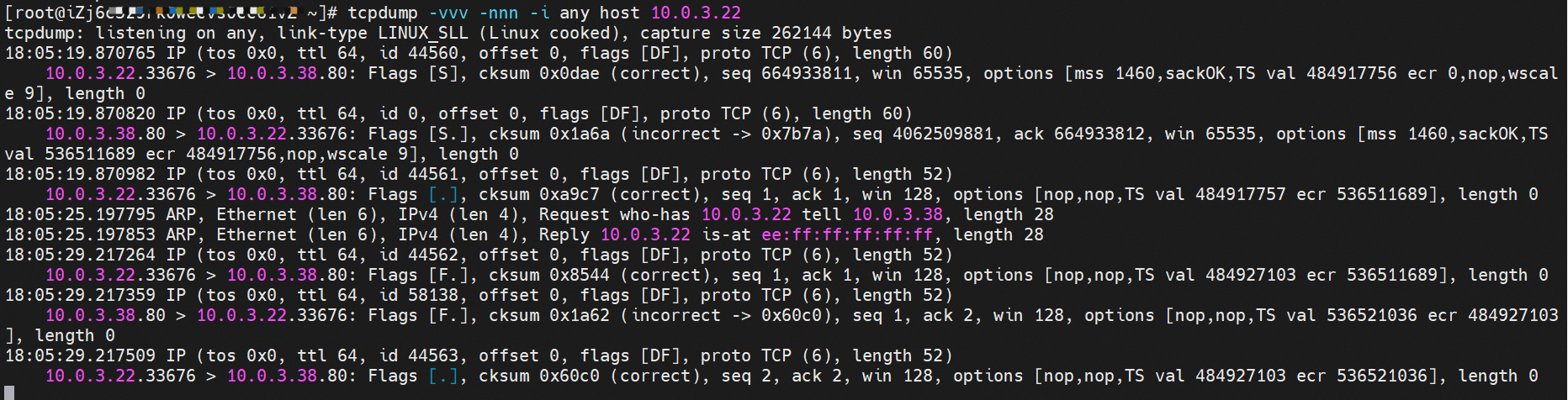

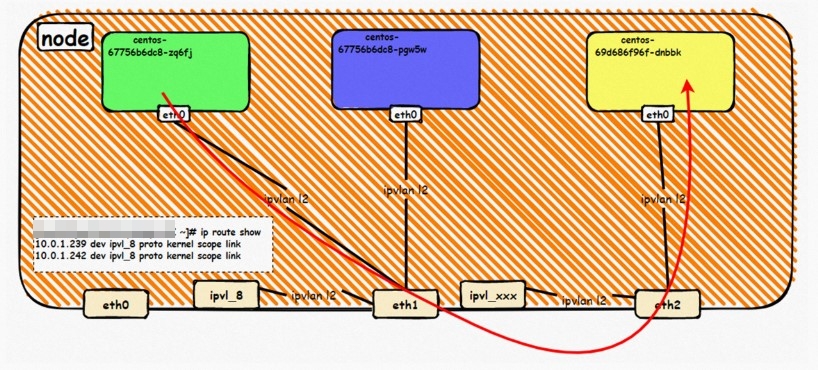

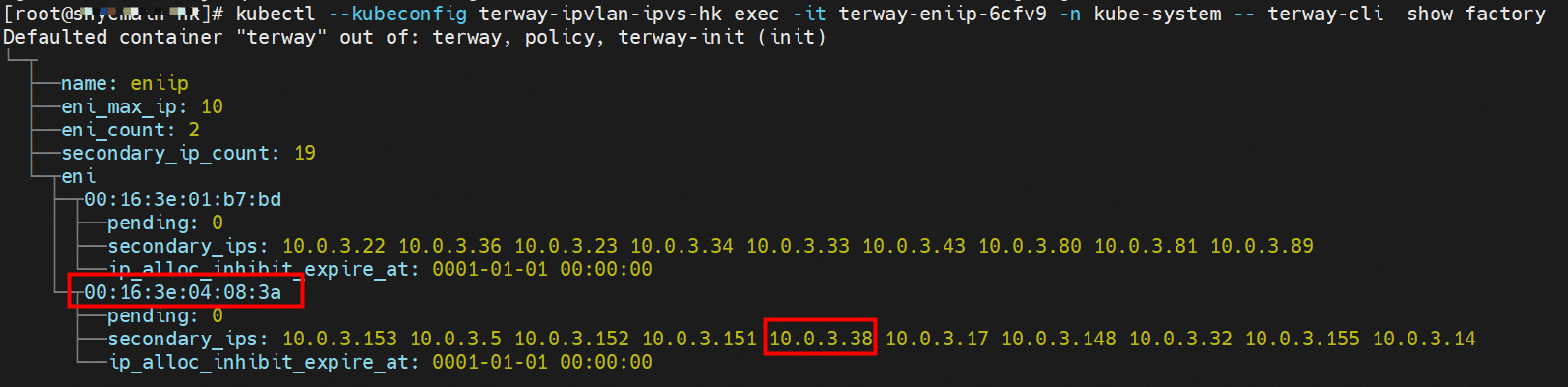

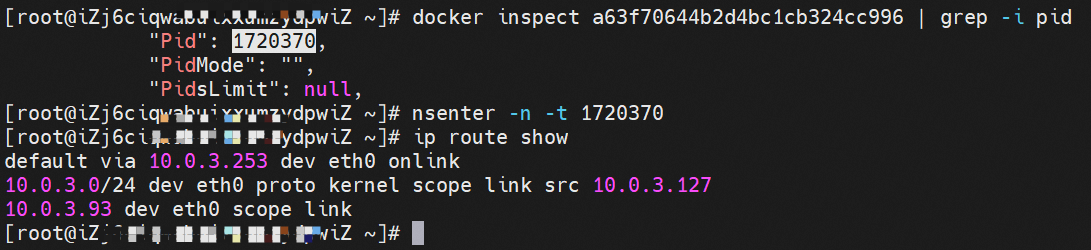

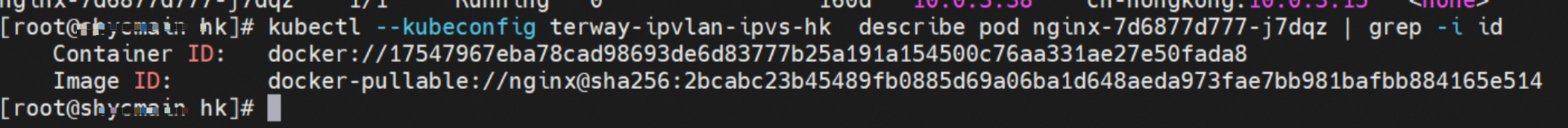

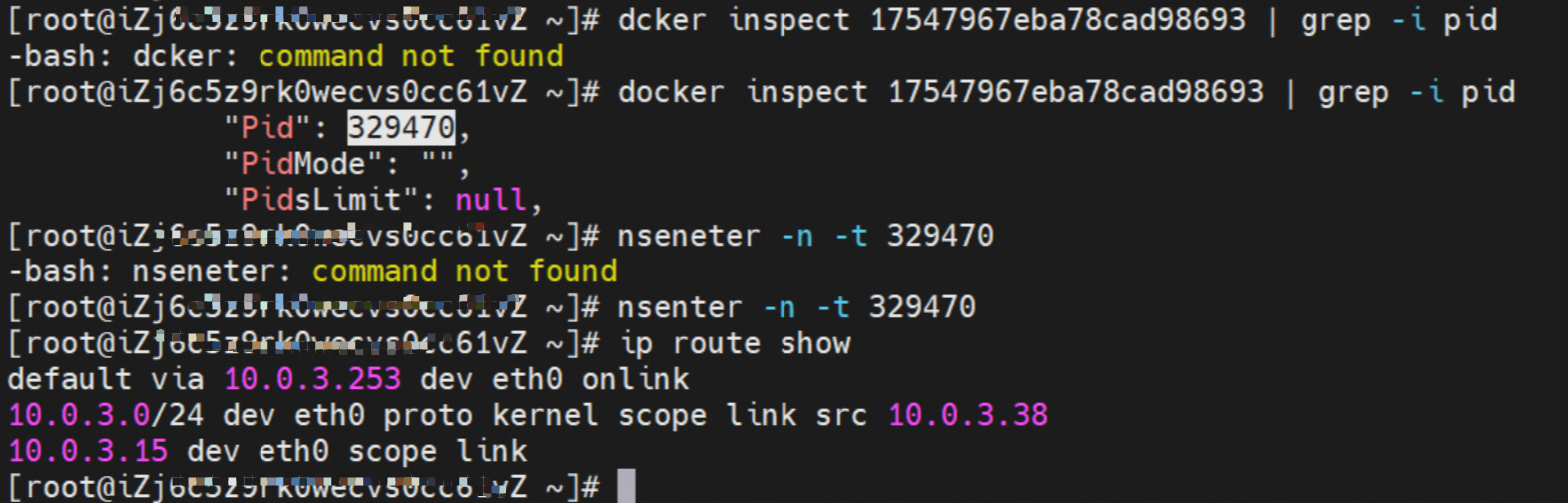

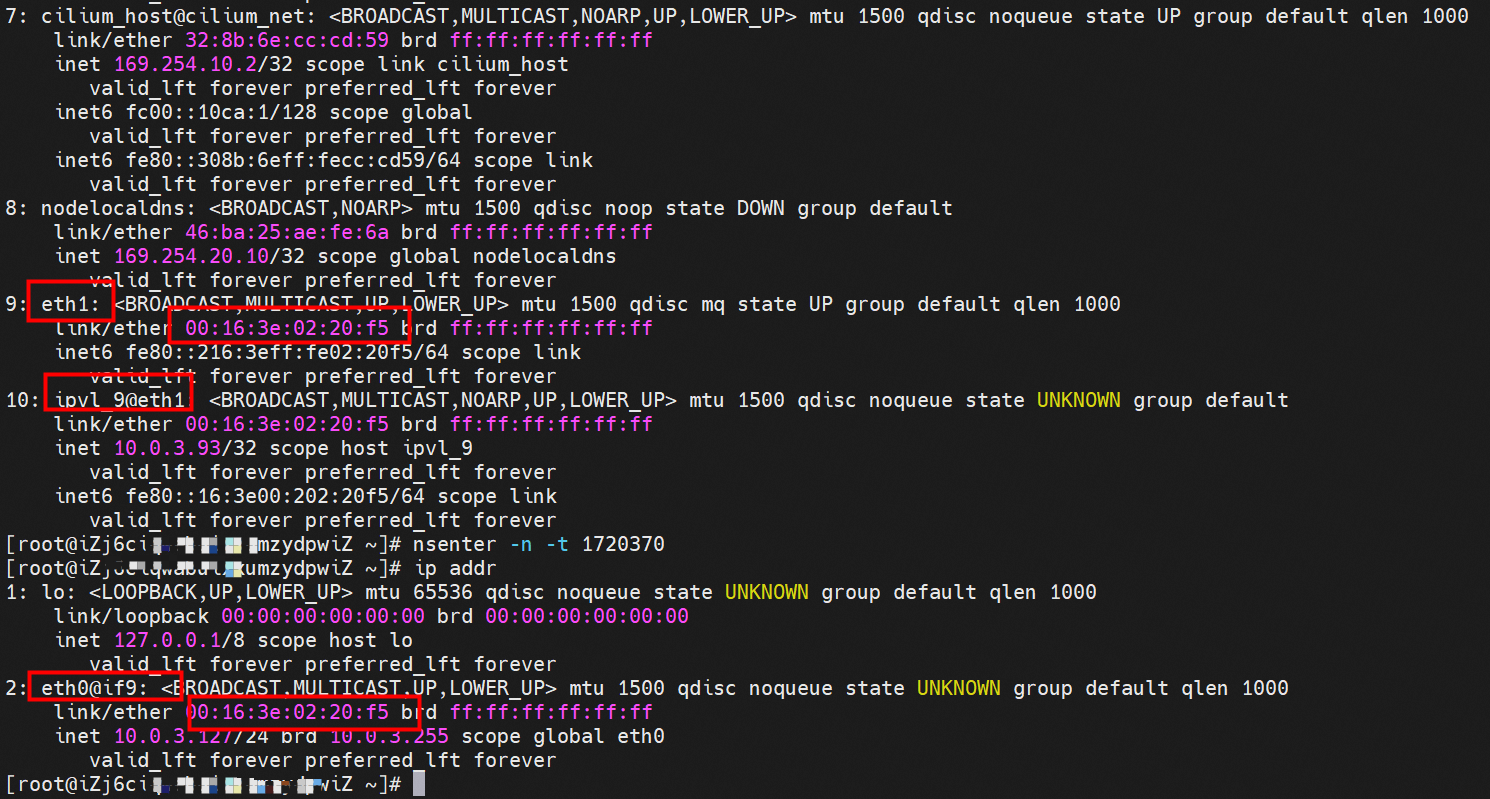

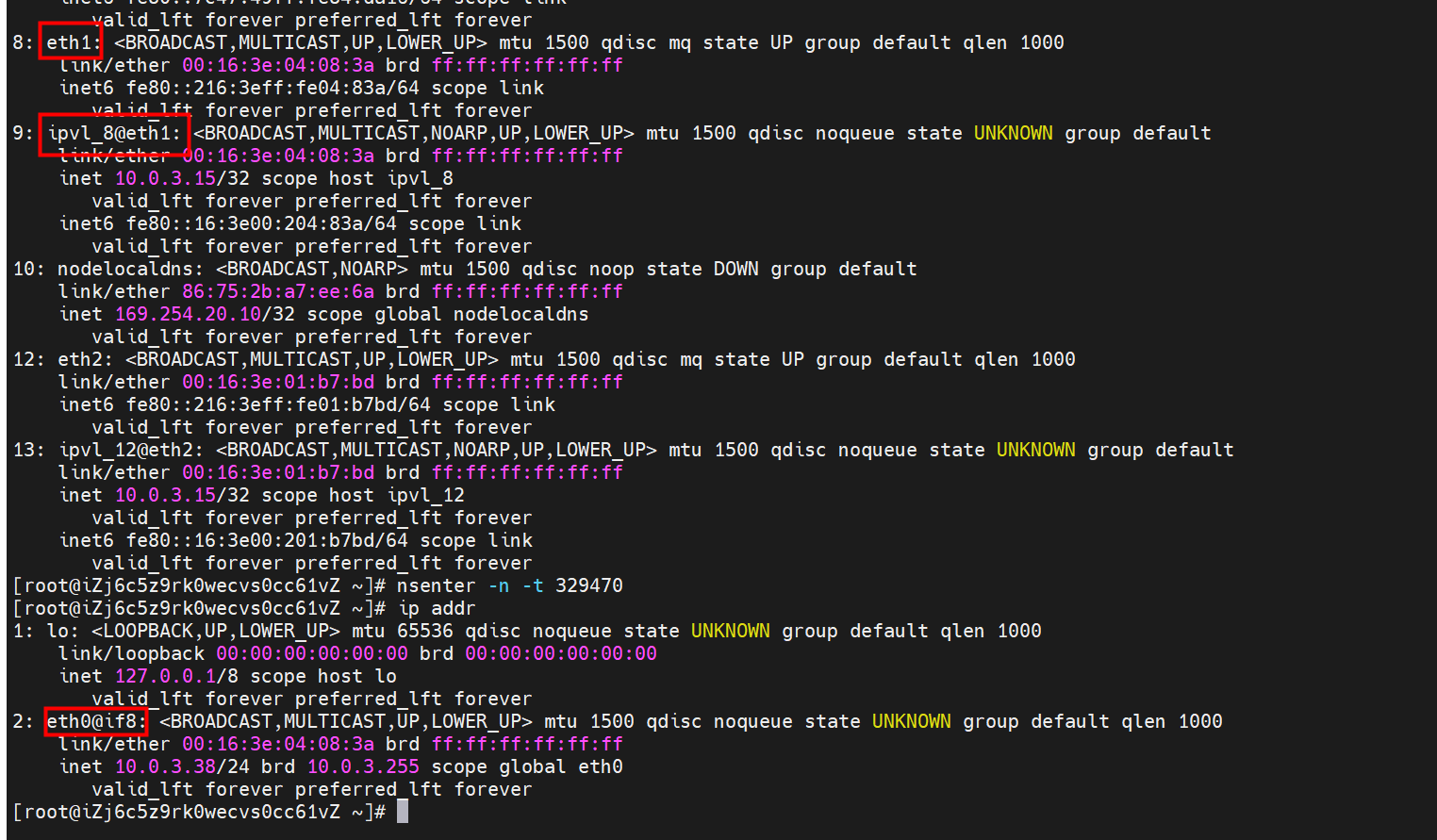

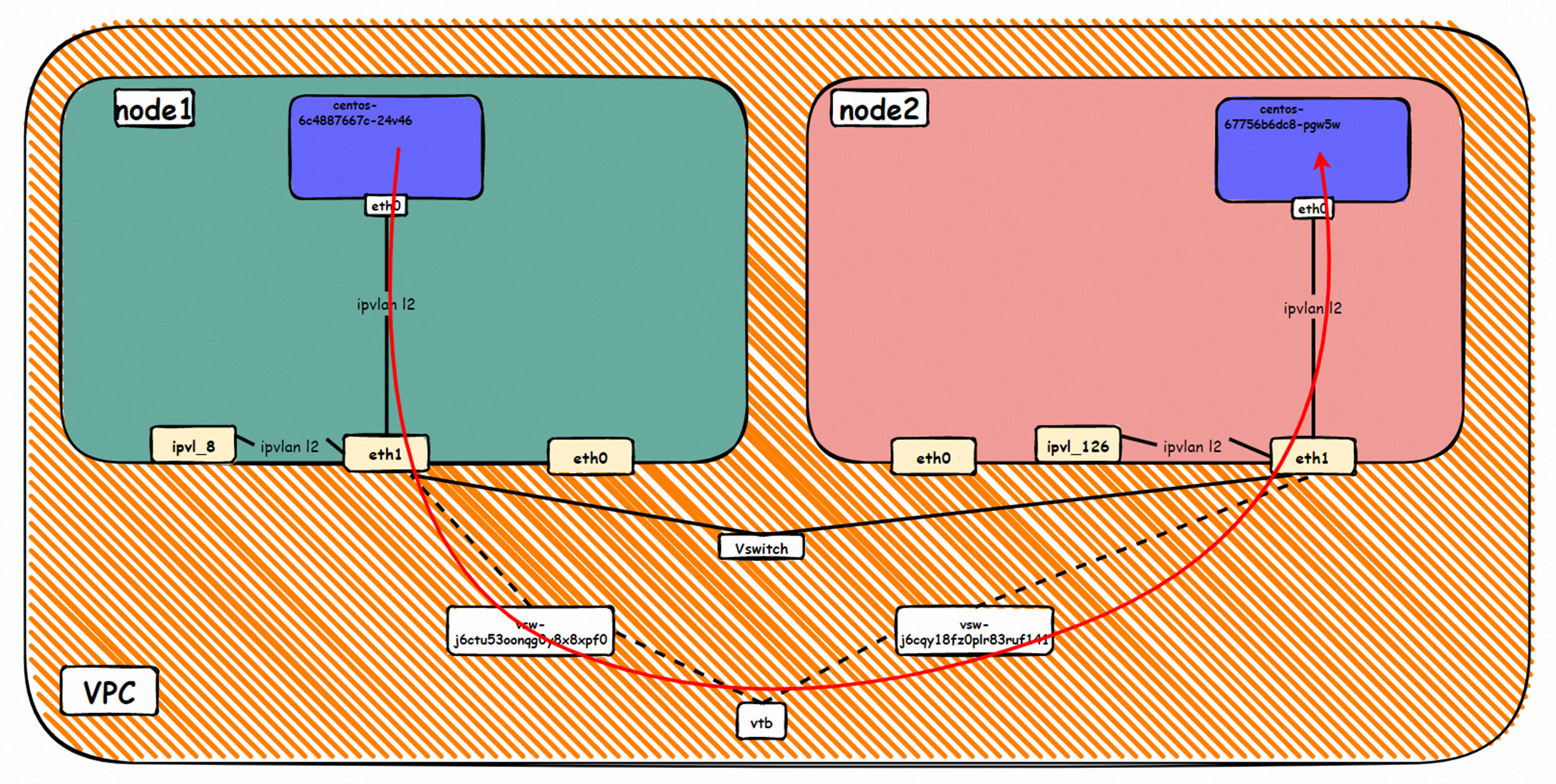

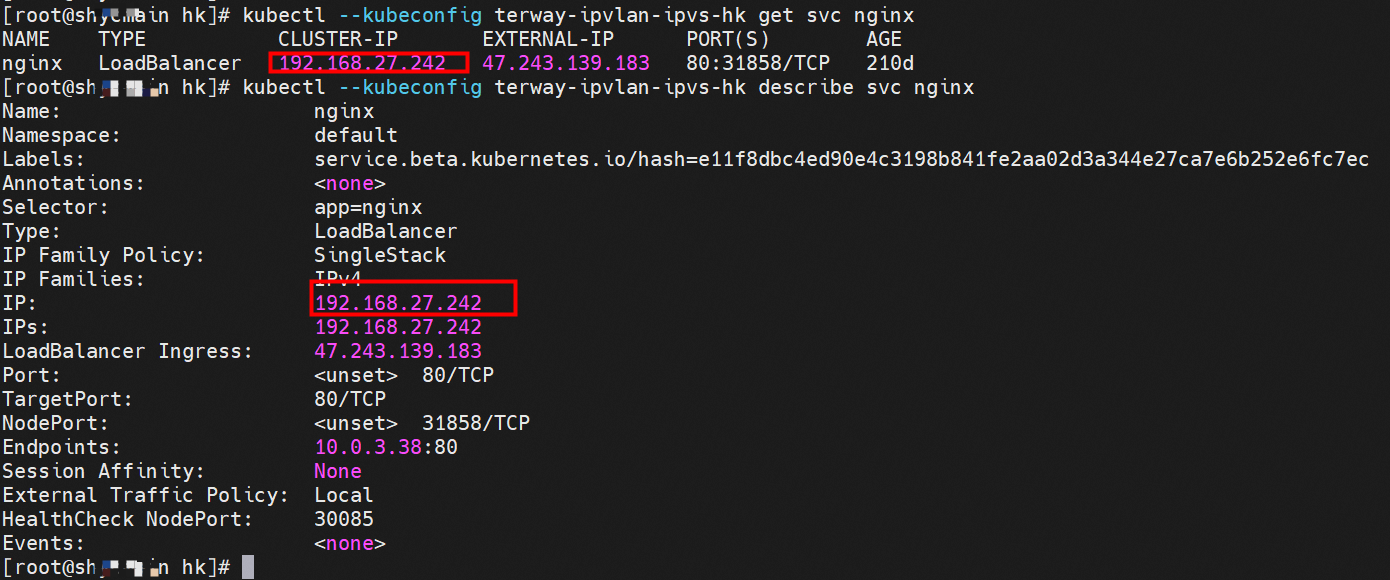

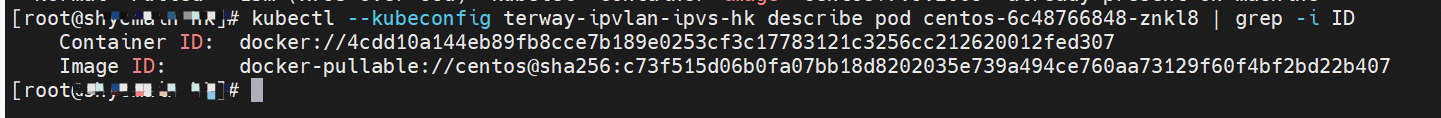

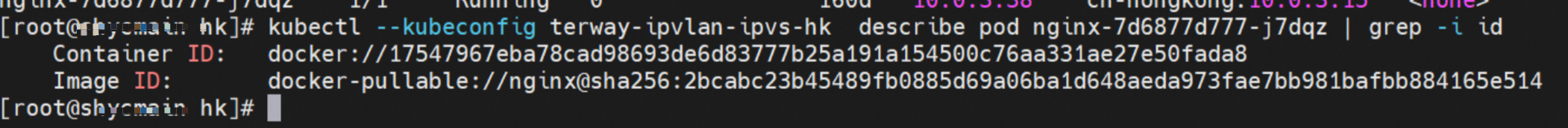

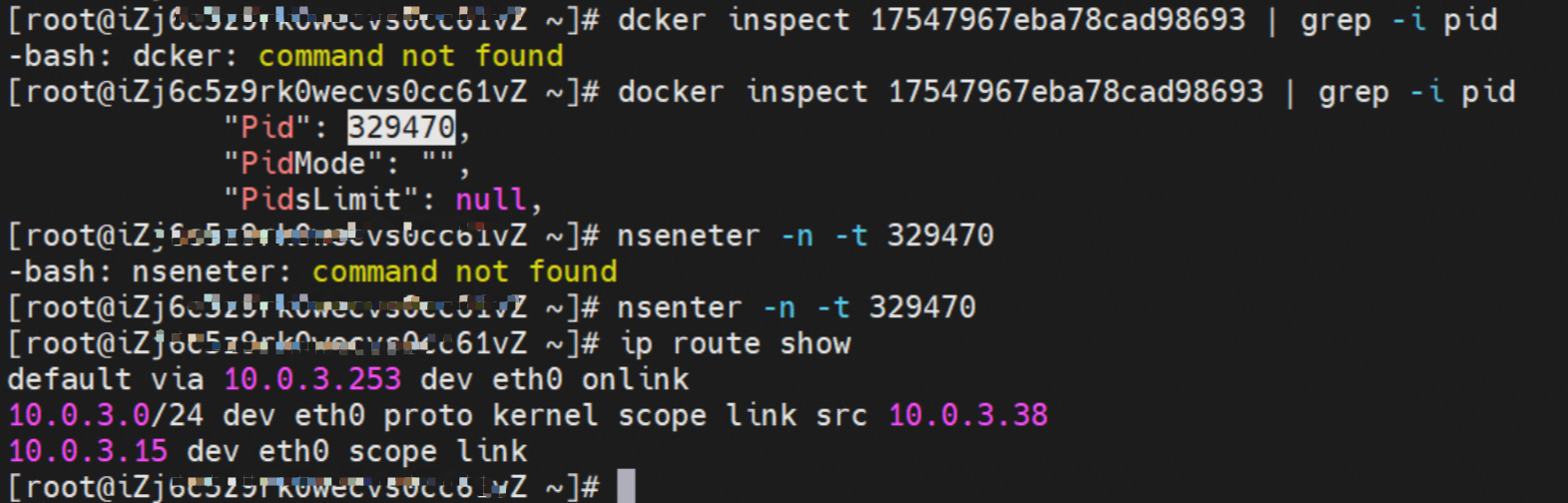

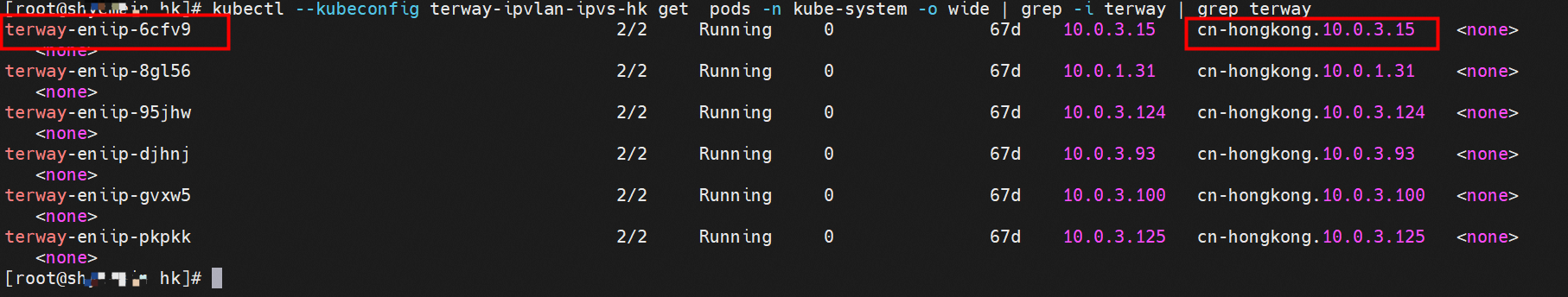

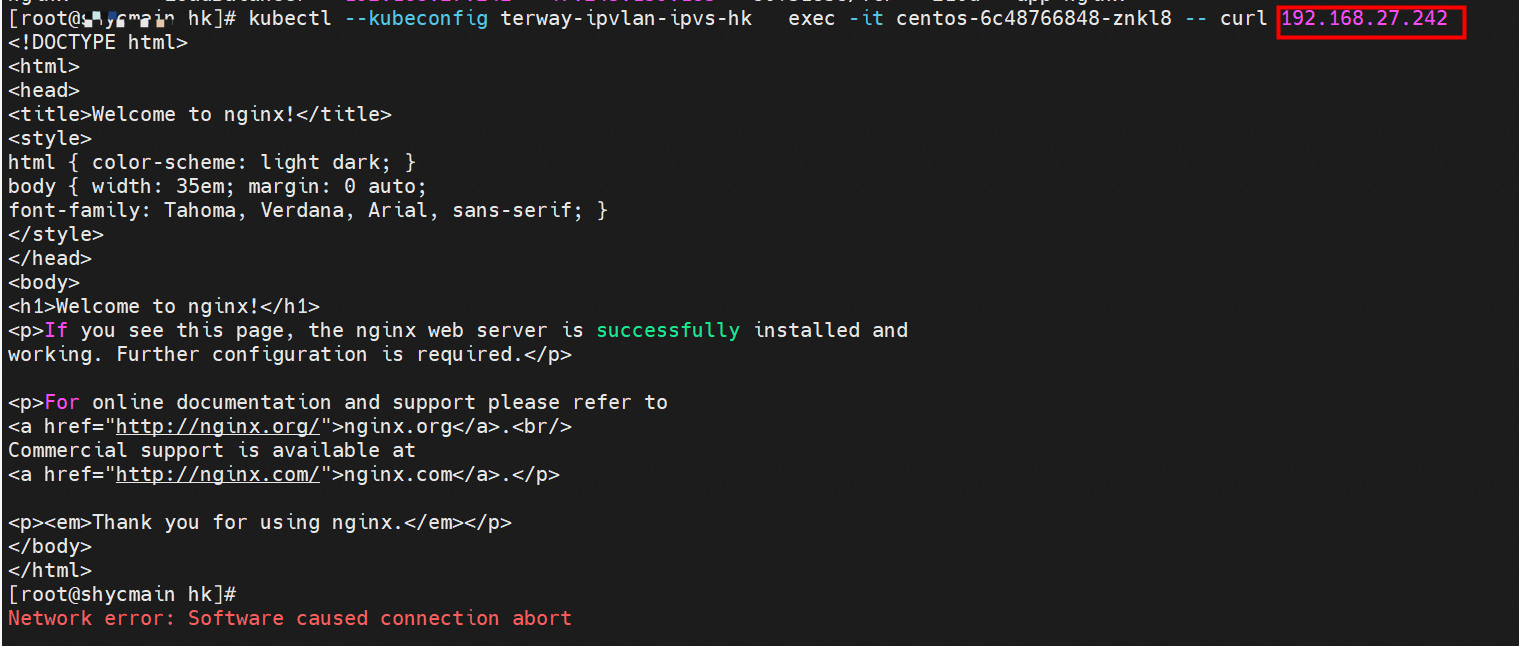

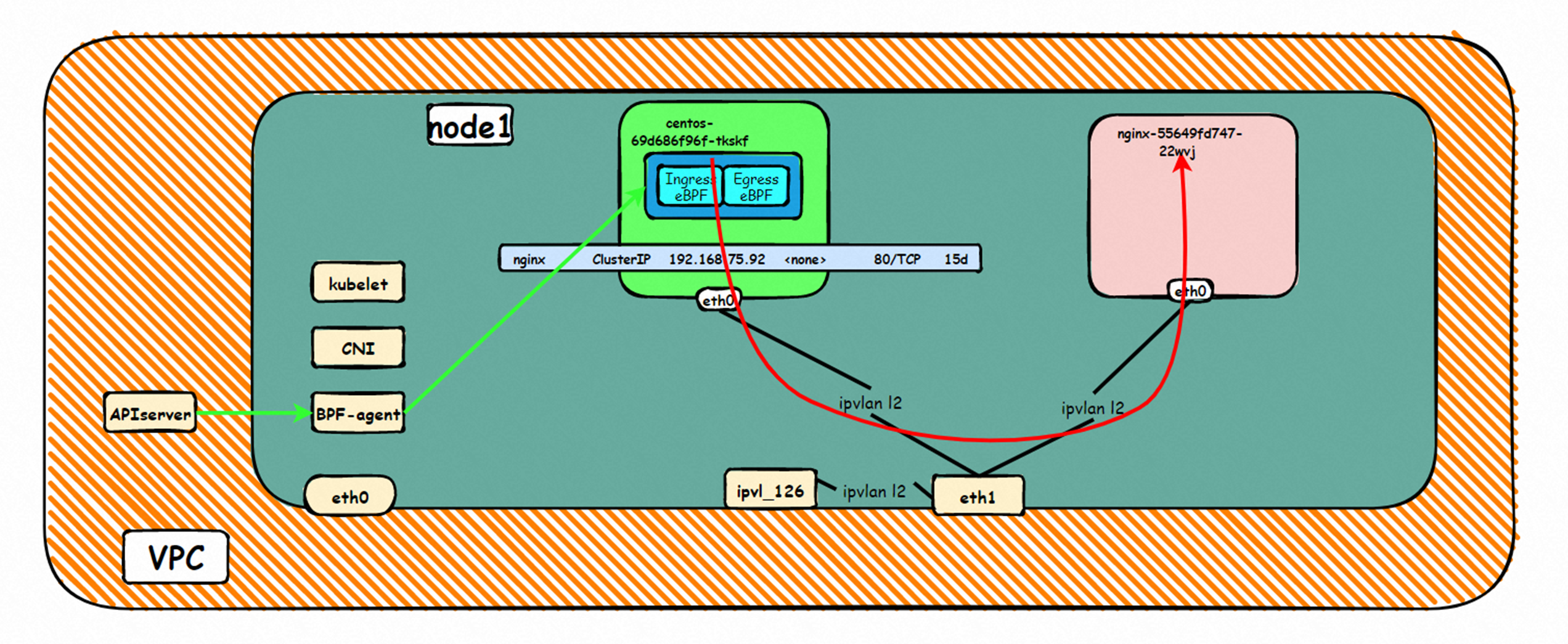

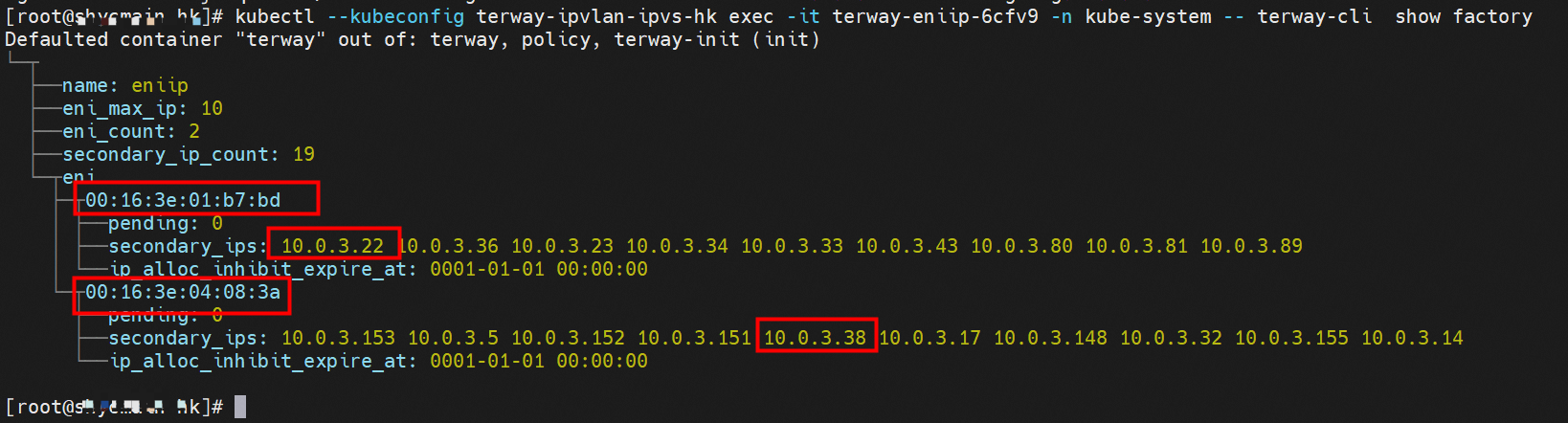

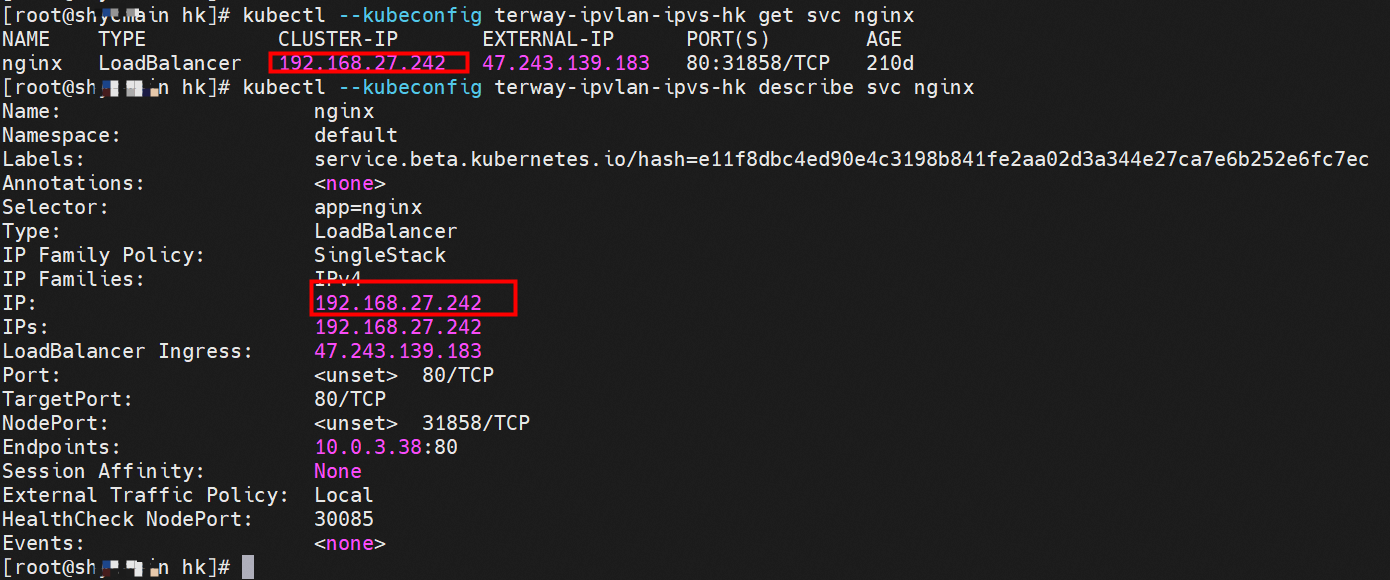

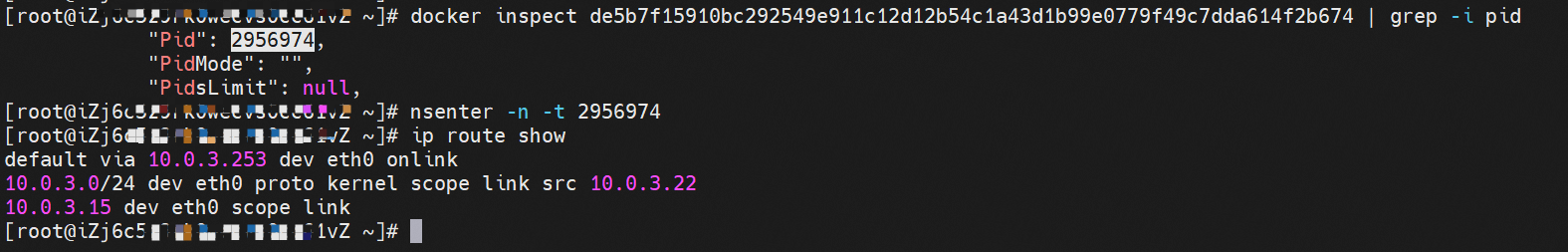

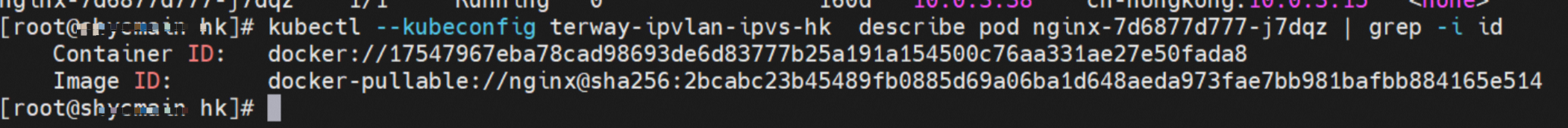

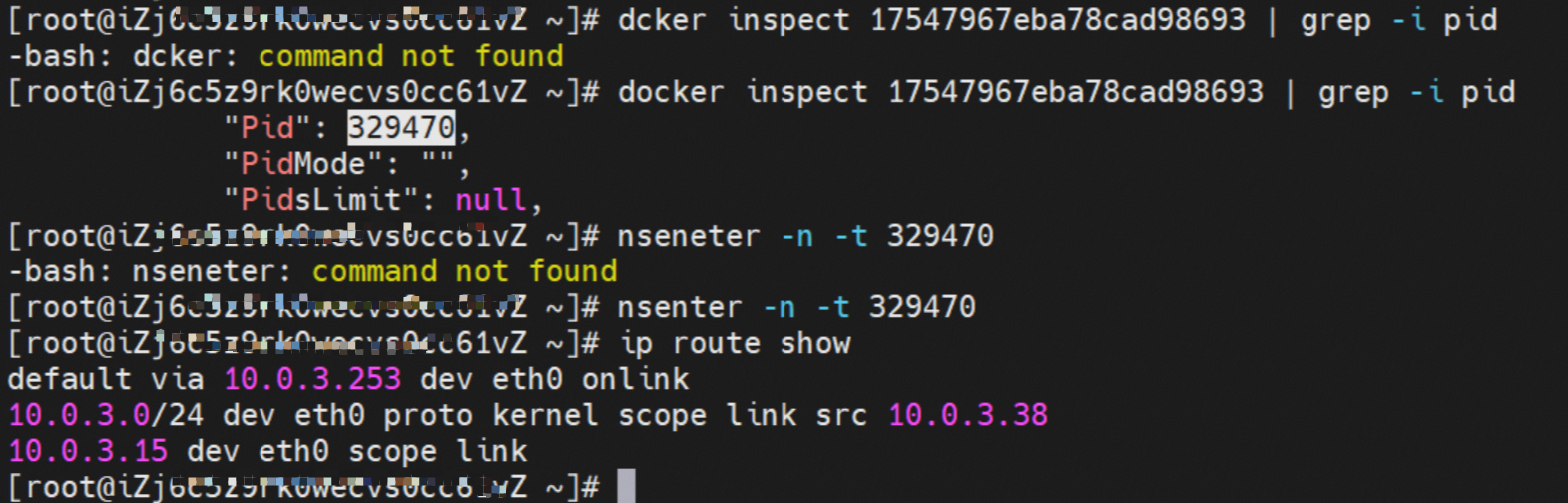

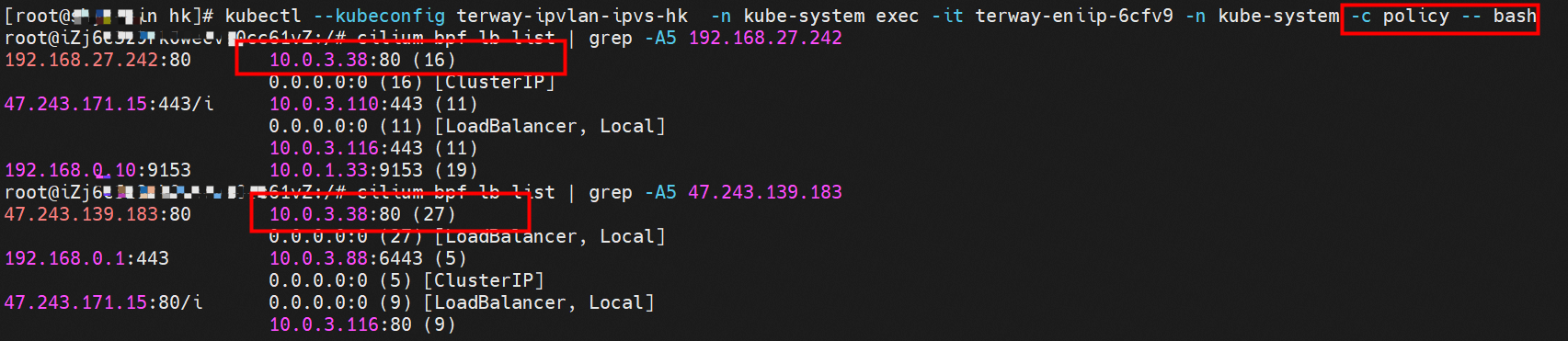

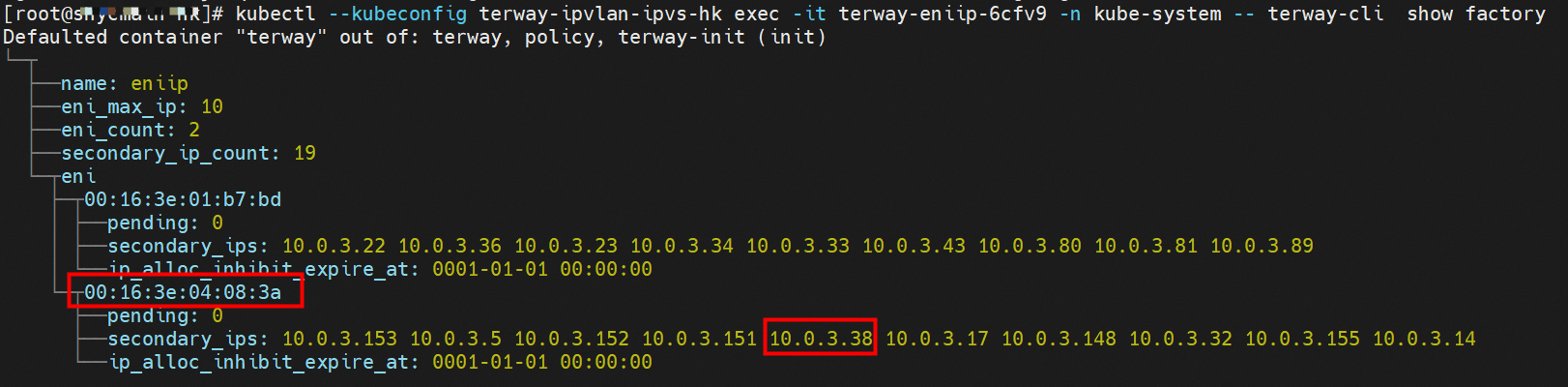

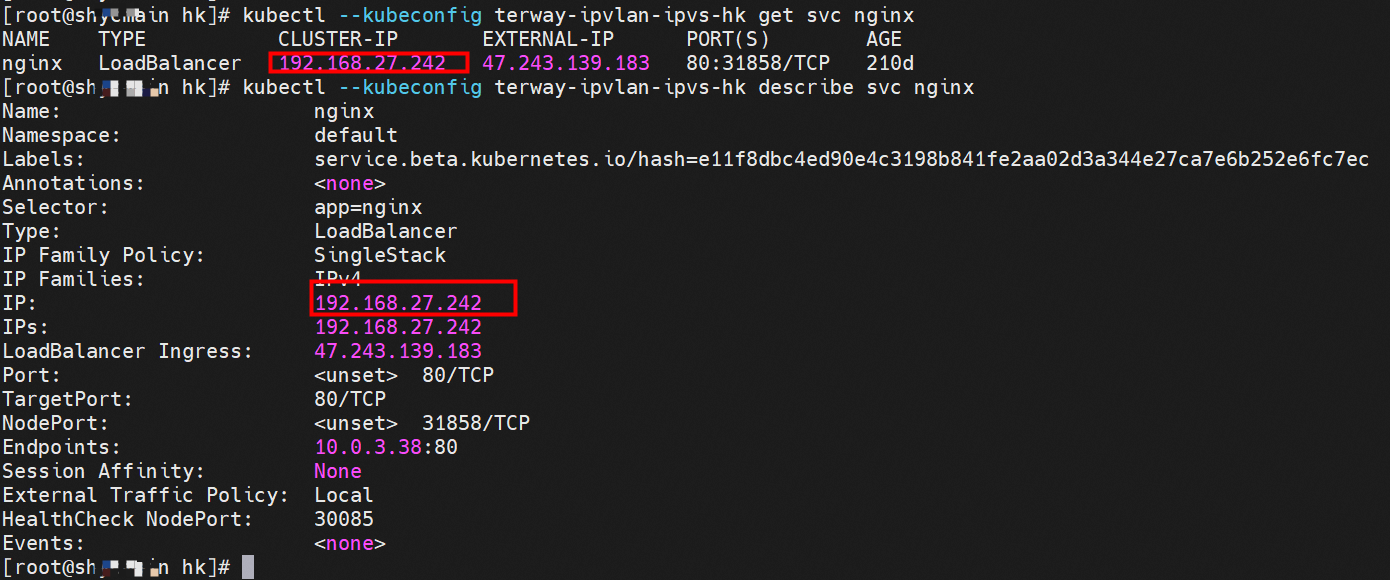

So how does a pod communicate with the ECS OS? At the OS level there are ipvl_x interfaces attached to eth1. The OS creates an ipvl_x interface for each secondary ENI, and it serves as the tunnel between the OS and the pods.

How does the ECS OS decide which container a packet should go to? The Linux routing table on the host shows that all traffic destined for a pod IP is forwarded to that pod's corresponding ipvl_x virtual interface. At this point the inbound and outbound path between the ECS OS and the pod's network namespace is fully configured. That covers how IPVLAN is realized in the network architecture.

ENI multi-IP is implemented much as described in part three of this series (Terway ENIIP). Terway runs as a DaemonSet on every node, and the Terway pod on each node can be seen in the accompanying command output. The `terway-cli show factory` command shows the number of secondary ENIs on the node, their MAC addresses, and the IPs on each ENI.

How are Services implemented? Readers of the earlier parts of this series know that when a pod accesses a Service, the container network uses various means to forward the request to the ECS host, where the netfilter module resolves the Service IP. This works, but the traffic has to cross from the pod's network namespace into the network namespace of the ECS OS and passes through the kernel protocol stack twice, which inevitably costs performance. Workloads that push concurrency and performance to the limit may not be fully satisfied. So what can be done for high-concurrency, latency-sensitive services? Is there a way to resolve the backend inside the pod's own network namespace, so that, combined with IPVLAN, the packet traverses the kernel protocol stack only once? eBPF, as available in 4.19 kernels, meets this need well. This article does not go into eBPF in depth; the official documentation has the details. It is enough to know that eBPF is a sandbox for running programs safely in the kernel: when a specified kernel event is triggered, the attached eBPF program runs. Using this, packets addressed to a Service IP can be rewritten at the tc layer.
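The host-side routing step described above, where traffic for a pod IP is steered to that pod's ipvl_x interface, is ordinary longest-prefix matching. The sketch below models it in Python; the route table is hypothetical (the article does not list the actual routes), and only the interface name ipvl_8 and the pod IP 10.0.3.38 come from the article's example.

```python
import ipaddress
from typing import List, Optional, Tuple


def lookup_device(routes: List[Tuple[str, str]], dst: str) -> Optional[str]:
    """Return the output device of the most specific route matching dst."""
    dst_ip = ipaddress.ip_address(dst)
    best_len = -1
    best_dev = None
    for prefix, dev in routes:
        net = ipaddress.ip_network(prefix)
        if dst_ip in net and net.prefixlen > best_len:
            best_len = net.prefixlen
            best_dev = dev
    return best_dev


# Hypothetical host routing table: one /32 route per local pod IP.
HOST_ROUTES = [
    ("0.0.0.0/0", "eth0"),
    ("10.0.0.0/16", "eth0"),
    ("10.0.3.38/32", "ipvl_8"),
]

print(lookup_device(HOST_ROUTES, "10.0.3.38"))
print(lookup_device(HOST_ROUTES, "10.0.3.200"))
```

The /32 route wins over the broader prefixes, so only traffic for that exact pod IP is handed to the IPVLAN interface.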
For example, as the figure above shows, the cluster has a Service named nginx with ClusterIP 192.168.27.242 and backend pod IP 10.0.3.38. `cilium bpf lb list` shows that in the eBPF program, access to ClusterIP 192.168.27.242 is redirected to 10.0.3.38, while the pod itself has only a default route. This shows that in IPVLAN+EBPF mode, when a pod accesses a Service IP, eBPF translates the Service IP into the IP of one of the Service's backend pods inside the pod's network namespace, and only then does the packet leave the pod. In other words, the Service IP exists only inside the client pod's network namespace; it cannot be captured on the source ECS instance, in the destination pod, or on the destination pod's ECS instance.

Now suppose a Service has more than 100 backend pods. Because of eBPF, the Service IP cannot be captured outside the pod, so when network jitter occurs, which backend IP should be captured, and on which backend pod? It is a painful scenario with no easy answer. Container Service and AES have jointly built ACK Net-Exporter, a container network observability tool that supports continuous observation and diagnosis for this scenario.
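The translation step can be pictured as a table lookup that rewrites the destination before the packet leaves the pod. The following is a toy model in Python, not Cilium's implementation: the `Packet` type, the `translate_at_tc` helper, and the random backend choice are all assumptions made for illustration. Only the ClusterIP, the backend IP, and the client IP 10.0.3.5 are taken from the article.

```python
import random
from dataclasses import dataclass, replace
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dport: int


# Service table in the spirit of `cilium bpf lb list`: (ClusterIP, port) -> backends.
LB_MAP: Dict[Tuple[str, int], List[Tuple[str, int]]] = {
    ("192.168.27.242", 80): [("10.0.3.38", 80)],
}


def translate_at_tc(pkt: Packet, lb_map: Dict[Tuple[str, int], List[Tuple[str, int]]]) -> Packet:
    """Rewrite a Service destination to one backend; leave other packets untouched."""
    backends = lb_map.get((pkt.dst, pkt.dport))
    if not backends:
        return pkt
    ip, port = random.choice(backends)  # stand-in for the real backend selection
    return replace(pkt, dst=ip, dport=port)


before = Packet(src="10.0.3.5", dst="192.168.27.242", dport=80)
after = translate_at_tc(before, LB_MAP)
print(before.dst, "->", after.dst)
```

Everything downstream of this rewrite, including any capture point outside the pod, sees only the backend address, which is the observability problem the paragraph above describes.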
The Terway IPVLAN+EBPF mode can be summarized as follows:

- Linux kernels 4.2 and later support IPVLAN virtual networking, which lets a single NIC present multiple sub-interfaces with different IP addresses. Terway binds the ENI's secondary IPs to IPVLAN sub-interfaces to connect the network. This keeps the ENI multi-IP network structure simple and performs better than veth policy routing.
- Node-to-pod access goes through the host protocol stack; pod-to-pod access does not.
- In IPVLAN+EBPF mode, when a pod accesses a Service IP, eBPF translates the Service IP into the IP of one of the Service's backend pods inside the pod's network namespace before the packet leaves the pod. The Service IP therefore cannot be captured on the source ECS instance, in the destination pod, or on the destination pod's ECS instance.

Given the characteristics of container networking, the network paths in Terway IPVLAN+EBPF mode fall into two broad SOP scenarios: services exposed through pod IPs and services exposed through Services. These break down further into 11 smaller SOP scenarios.
After consolidating the data paths, the typical scenarios under the Terway IPVLAN+EBPF architecture are the following 11:

1. Accessing a pod IP from the node where the pod runs
2. Accessing a pod IP between pods on the same node (pods on the same ENI)
3. Accessing a pod IP between pods on the same node (pods on different ENIs)
4. Pod-to-pod access across nodes
5. An in-cluster pod accessing a Service ClusterIP (or ExternalIP with Terway ≥ 1.2.0), with the backend pod and client pod on the same ENI
6. An in-cluster pod accessing a Service ClusterIP (or ExternalIP with Terway ≥ 1.2.0), with the backend pod and client pod on different ENIs of the same ECS instance
7. An in-cluster pod accessing a Service ClusterIP (or ExternalIP with Terway ≥ 1.2.0), with the backend pod and client pod on different ECS instances
8. An in-cluster pod accessing a Service ExternalIP (Terway < 1.2.0), with the backend pod and client pod on the same ENI
9. An in-cluster pod accessing a Service ExternalIP (Terway < 1.2.0), with the backend pod and client pod on different ENIs of the same ECS instance
10. An in-cluster pod accessing a Service ExternalIP (Terway < 1.2.0), with the backend pod and client pod on different ECS instances
11. Accessing a Service ExternalIP from outside the cluster

Scenario 1 (section 2.1): accessing a pod IP from the node where the pod runs.

Node cn-hongkong.10.0.3.15 hosts pod nginx-7d6877d777-j7dqz with IP 10.0.3.38.

nginx-7d6877d777-j7dqz has IP 10.0.3.38, and its container PID on the host is 329470. The container's network namespace has a default route pointing to the container's eth0.
Inside the ECS OS, the container's eth0 is connected to the ECS secondary ENI eth1 through an IPVLAN tunnel, and eth1 also has a virtual interface, ipvl_8@eth1.

The Linux routing table on the host shows that all traffic destined for a pod IP is forwarded to that pod's corresponding ipvl_x virtual interface, which completes the tunnel between the ECS OS and the pod.

The destination is reachable. Packets can be captured on eth0 in the network namespace of nginx-7d6877d777-zp5jg and on ipvl_8 on the ECS instance.

Forwarding path:

- Traffic does not pass through the secondary ENI assigned to the pod.
- The path enters ipvl_xxx through a routing table lookup and does not need to traverse the ENI.
- The full request path is node -> ipvl_xxx -> ECS1 Pod1.

Scenario 2 (section 2.2): pod-to-pod access on the same node, pods on the same ENI.

Node cn-hongkong.10.0.3.15 hosts two pods, nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8, with IPs 10.0.3.38 and 10.0.3.5.

From the Terway pod on this node, `terway-cli show factory` shows that both IPs (10.0.3.5 and 10.0.3.38) belong to the same MAC address, 00:16:3e:04:08:3a. The two IPs therefore belong to the same ENI, so nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 share one ENI.

centos-6c48766848-znkl8 has IP 10.0.3.5, and its container PID on the host is 2747933. Its network namespace has exactly one default route, pointing to the container's eth0, so the pod reaches every destination through that route.
nginx-7d6877d777-j7dqz has IP 10.0.3.38, and its container PID on the host is 329470. Its network namespace has a default route pointing to the container's eth0.

Inside the ECS OS, the container's eth0 is connected to the ECS secondary ENI eth1 through an IPVLAN tunnel, and eth1 also has a virtual interface, ipvl_8@eth1.

The destination is reachable. Packets can be captured on eth0 in the network namespace of centos-6c48766848-znkl8 and on eth0 in the network namespace of nginx-7d6877d777-zp5jg. The ipvl_8 interface does not capture any of this traffic.

Forwarding path:

- Traffic does not pass through the secondary ENI assigned to the pods.
- Traffic does not pass through any intermediate hop in the host ECS network namespace.
- The request never leaves through the ENI assigned to the pods; it is forwarded to the peer pod inside the ECS instance.
- The full request path is ECS1 Pod1 -> ECS1 Pod2, entirely inside the ECS instance. Compared with the veth policy-routing mode, this avoids two extra hops through the host-side calixxx veth devices, so performance is better.
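Whether two pods share an ENI is the deciding fact in these scenarios, and it comes from reading the `terway-cli show factory` output. The sketch below assumes that output has already been reduced by hand to a MAC-to-IPs mapping; the dictionary shape and helper names are hypothetical, while the MAC addresses and IPs are the ones quoted in the article.

```python
from typing import Dict, Optional, Set

# Hand-built from the article's examples; not the tool's actual output format.
ENI_IPS: Dict[str, Set[str]] = {
    "00:16:3e:04:08:3a": {"10.0.3.5", "10.0.3.38"},
    "00:16:3e:01:b7:bd": {"10.0.3.22"},
}


def eni_of(ip: str, eni_ips: Dict[str, Set[str]]) -> Optional[str]:
    """Return the MAC of the ENI that owns this pod IP, or None if unknown."""
    for mac, ips in eni_ips.items():
        if ip in ips:
            return mac
    return None


def same_eni(ip_a: str, ip_b: str, eni_ips: Dict[str, Set[str]]) -> bool:
    """True only when both IPs are known and sit on the same ENI."""
    mac_a = eni_of(ip_a, eni_ips)
    return mac_a is not None and mac_a == eni_of(ip_b, eni_ips)


print(same_eni("10.0.3.5", "10.0.3.38", ENI_IPS))
print(same_eni("10.0.3.22", "10.0.3.38", ENI_IPS))
```

The first pair corresponds to the same-ENI case, where traffic stays inside the ECS instance; the second pair sits on different ENIs, where traffic has to leave through one ENI and return through the other.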
Scenario 3 (section 2.3): pod-to-pod access on the same node, pods on different ENIs.

Node cn-hongkong.10.0.3.15 hosts two pods, nginx-7d6877d777-j7dqz and busybox-d55494495-8t677, with IPs 10.0.3.38 and 10.0.3.22.

From the Terway pod on this node, `terway-cli show factory` shows that the two IPs belong to different MAC addresses: 10.0.3.22 to 00:16:3e:01:b7:bd and 10.0.3.38 to 00:16:3e:04:08:3a. The two IPs therefore belong to different ENIs, so nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 are on different ENIs.

busybox-d55494495-8t677 has IP 10.0.3.22, and its container PID on the host is 2956974. Its network namespace has exactly one default route, pointing to the container's eth0, so the pod reaches every destination through that route.

nginx-7d6877d777-j7dqz has IP 10.0.3.38, and its container PID on the host is 329470. Its network namespace has a default route pointing to the container's eth0.

Inside the ECS OS, each container's eth0 is connected to a secondary ENI through an IPVLAN tunnel. Matching the MAC addresses shows that nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 are assigned to eth1 and eth2 respectively.
The destination is reachable. Packets can be captured on eth0 in the network namespace of busybox-d55494495-8t677 and on eth0 in the network namespace of nginx-7d6877d777-zp5jg.

Forwarding path:

- Traffic does not pass through any intermediate hop in the host ECS network namespace.
- Traffic leaves the ECS instance through the ENI of the client pod and re-enters it through the ENI of the destination pod.
- The full request path is ECS1 Pod1 -> ECS1 eth1 -> VPC -> ECS1 eth2 -> ECS1 Pod2.

Scenario 4 (section 2.4): pod-to-pod access across nodes.

Node cn-hongkong.10.0.3.15 hosts nginx-7d6877d777-j7dqz with IP 10.0.3.38. Node cn-hongkong.10.0.3.93 hosts centos-6c48766848-dz8hz with IP 10.0.3.127.

From the Terway pod on the node, `terway-cli show factory` shows that nginx-7d6877d777-j7dqz's IP 10.0.3.38 belongs to the ENI with MAC address 00:16:3e:04:08:3a on cn-hongkong.10.0.3.15.

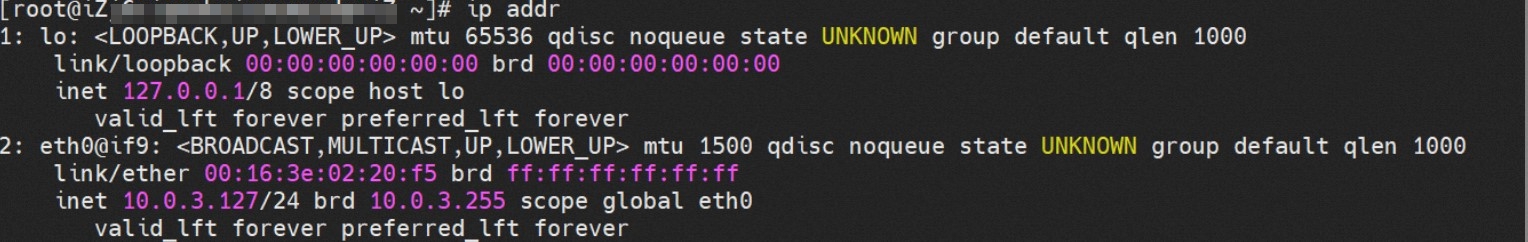

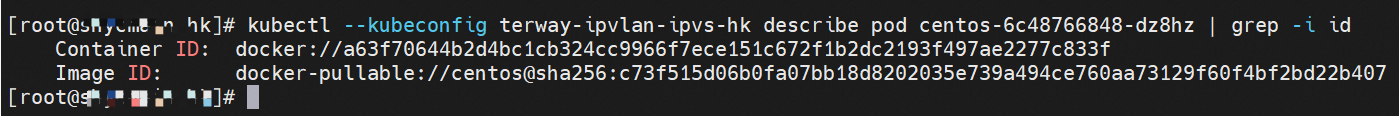

From the Terway pod on the node, `terway-cli show factory` shows that centos-6c48766848-dz8hz's IP 10.0.3.127 belongs to the ENI with MAC address 00:16:3e:02:20:f5 on cn-hongkong.10.0.3.93.

centos-6c48766848-dz8hz has IP 10.0.3.127, and its container PID on the host is 1720370. Its network namespace has exactly one default route, pointing to the container's eth0, so the pod reaches every destination through that route.

nginx-7d6877d777-j7dqz has IP 10.0.3.38, and its container PID on the host is 329470. Its network namespace has a default route pointing to the container's eth0.

Inside the ECS OS, the container is connected to the ECS secondary ENI eth1 through an IPVLAN tunnel. Matching the MAC addresses shows which ENI each of the two pods is assigned to.

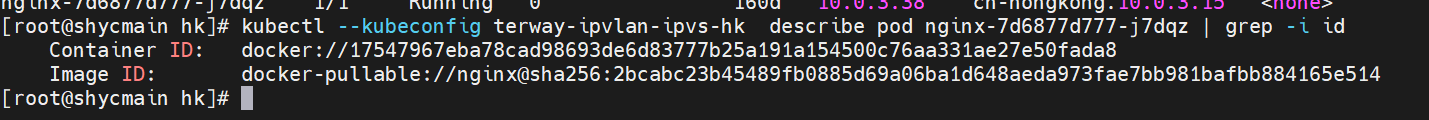

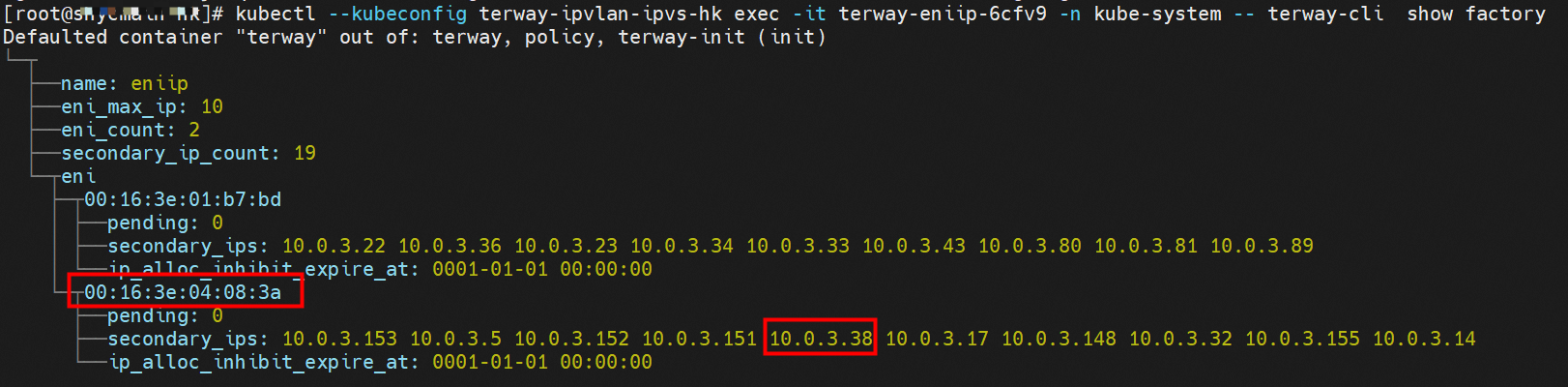

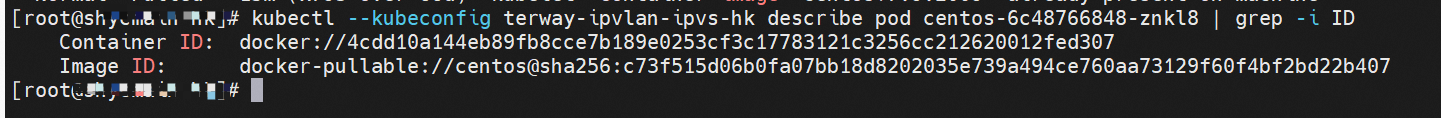

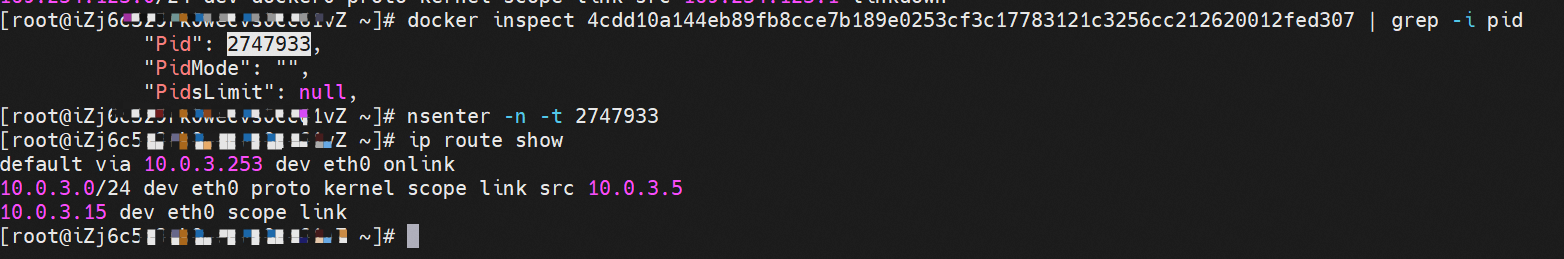

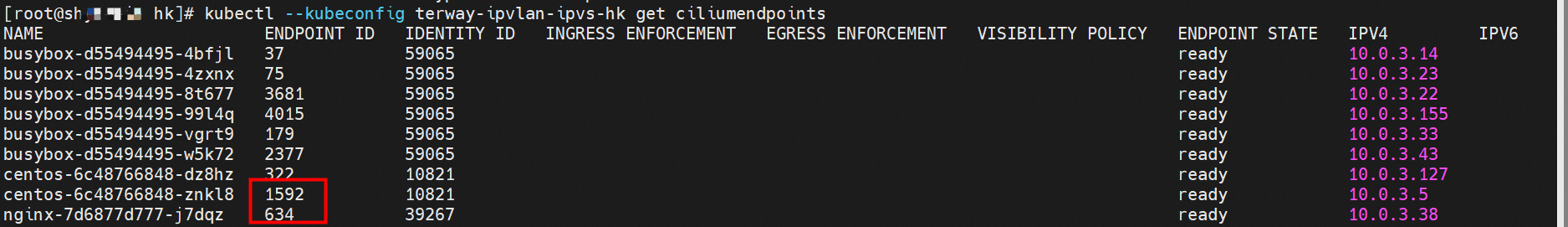

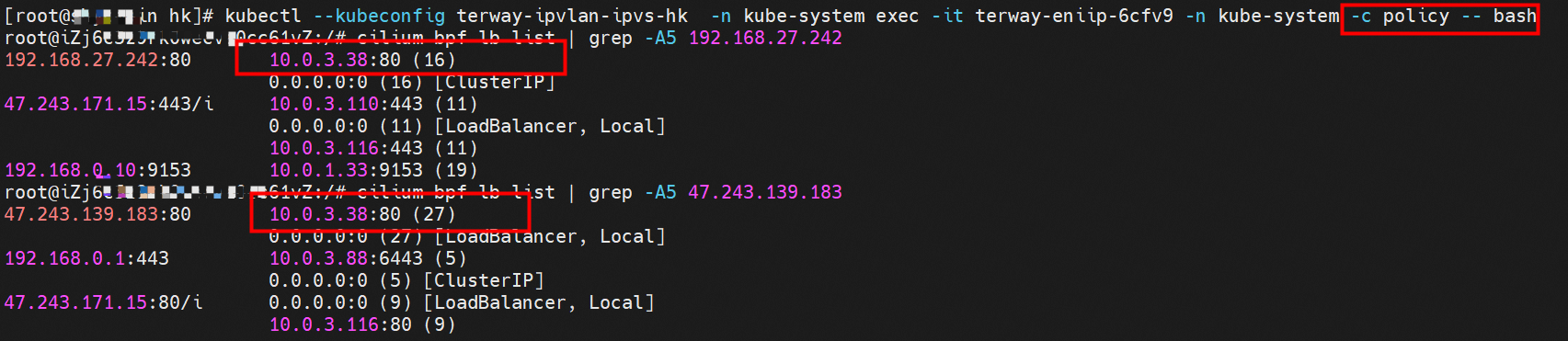

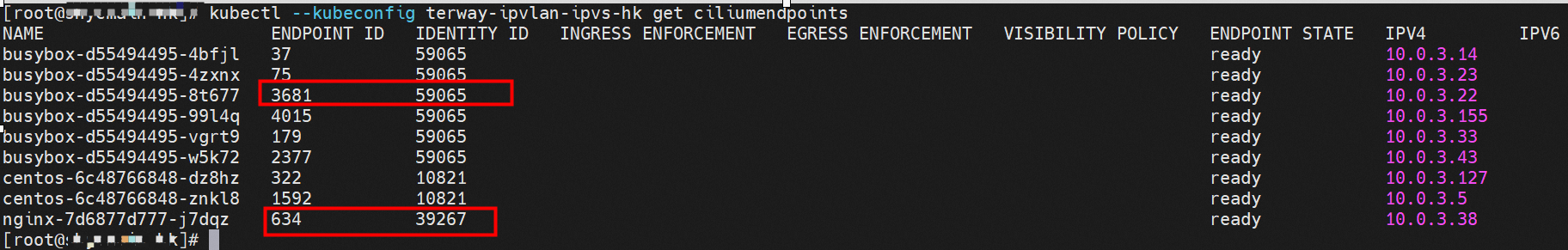

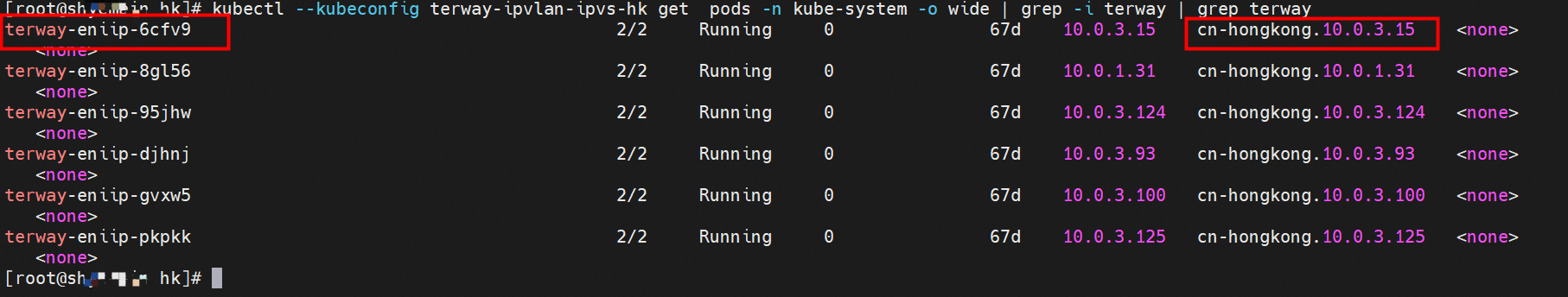

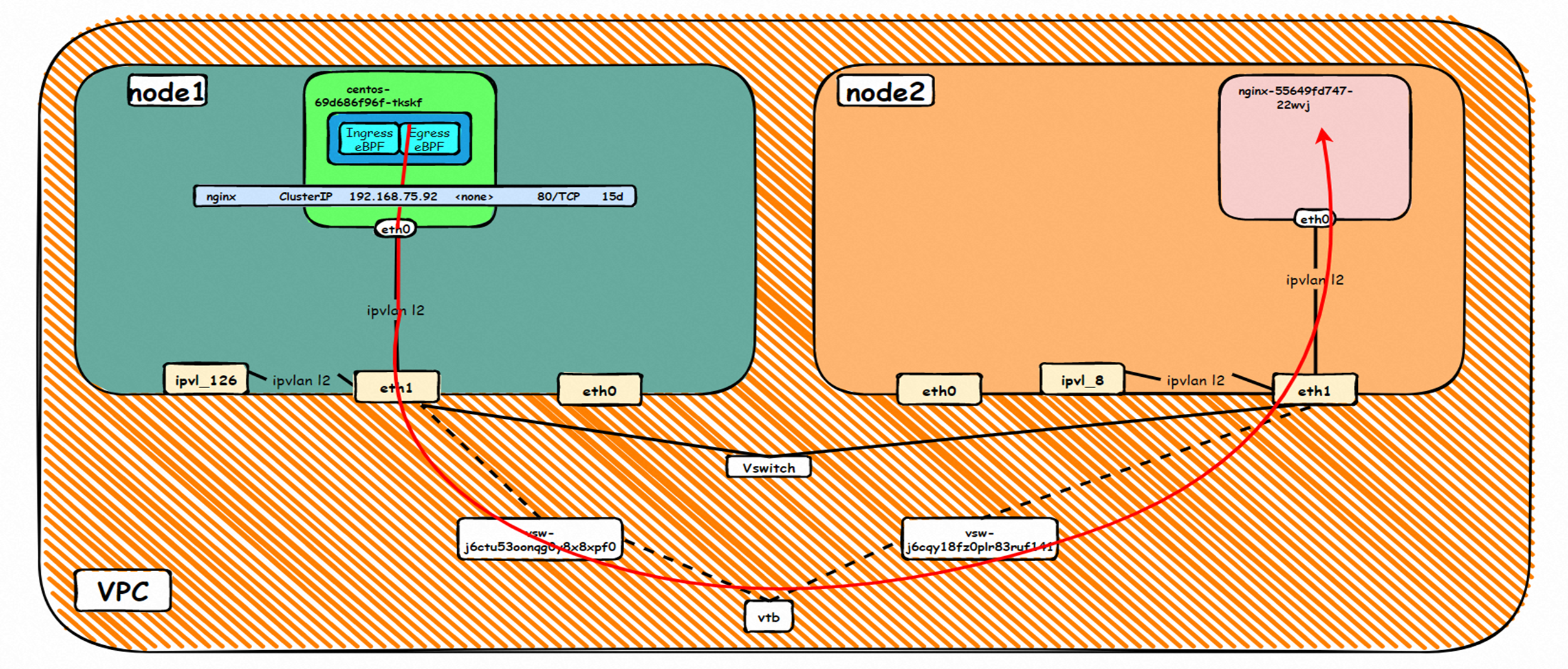

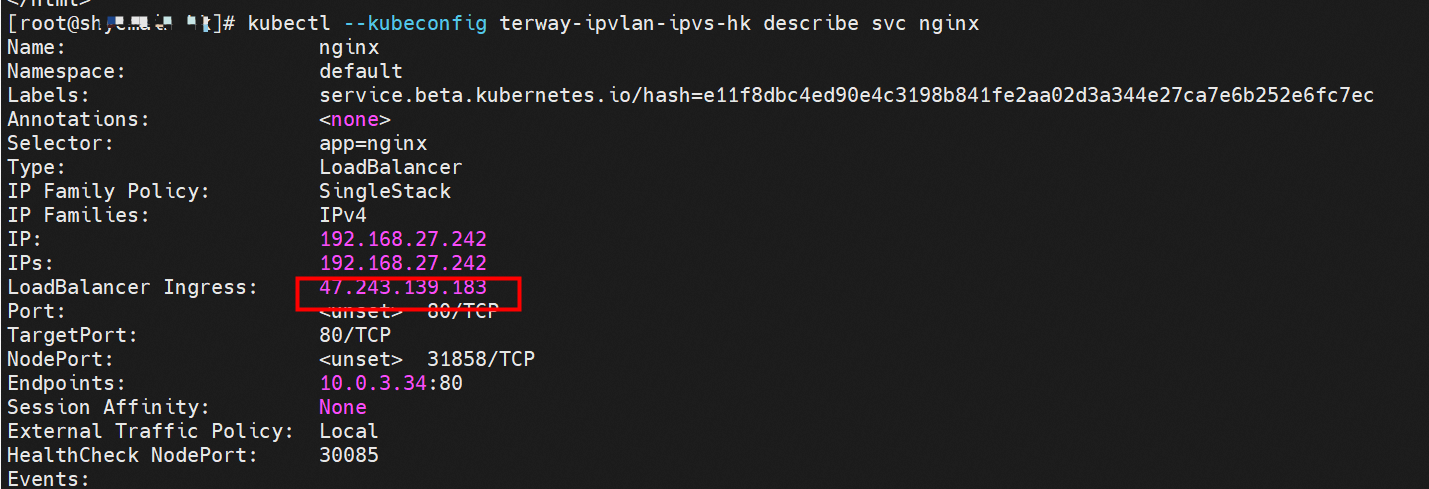

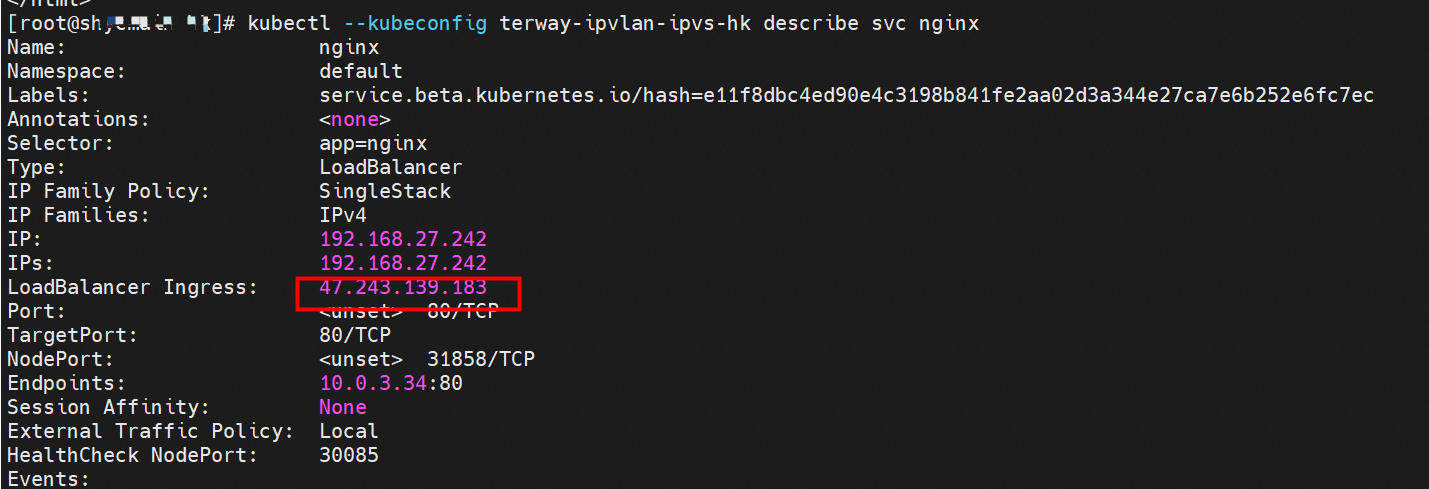

The destination is reachable. The packet captures are not shown here. On the client side, the flow can be captured on eth0 in the network namespace of centos-6c48766848-dz8hz and on the corresponding ENI eth1 of the ECS instance that hosts it. On the server side, the flow can be captured on eth0 in the network namespace of nginx-7d6877d777-j7dqz and on the corresponding ENI eth1 of the ECS instance that hosts it.

Forwarding path:

- Traffic does not pass through any intermediate hop in the host ECS network namespace.
- Traffic leaves the client's ECS instance through the ENI of the client pod and enters the destination ECS instance through the ENI of the destination pod.
- The full request path is ECS1 Pod1 -> ECS1 ethX -> VPC -> ECS2 ethY -> ECS2 Pod2.

Scenario 5 (section 2.5): an in-cluster pod accessing a Service ClusterIP, with the backend pod and client pod on the same ENI.

Node cn-hongkong.10.0.3.15 hosts two pods, nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8, with IPs 10.0.3.38 and 10.0.3.5.

From the Terway pod on this node, `terway-cli show factory` shows that both IPs (10.0.3.5 and 10.0.3.38) belong to the same MAC address, 00:16:3e:04:08:3a. The two IPs therefore belong to the same ENI, so nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 share one ENI.

`describe svc` shows that the nginx pod has been added as a backend of the Service nginx, whose ClusterIP is 192.168.27.242. With Terway ≥ 1.2.0, in-cluster access to a Service's ClusterIP and to its External IP follow the same path, so this section does not cover External IP separately and uses ClusterIP as the example. Access to External IP with Terway < 1.2.0 is covered in later sections.

centos-6c48766848-znkl8 has IP 10.0.3.5, and its container PID on the host is 2747933. Its network namespace has exactly one default route, pointing to the container's eth0, so the pod reaches every destination through that route.

nginx-7d6877d777-j7dqz has IP 10.0.3.38, and its container PID on the host is 329470. Its network namespace has a default route pointing to the container's eth0.

ACK uses Cilium to drive eBPF. The Cilium output shows that the identity IDs of nginx-7d6877d777-j7dqz and centos-6c48766848-znkl8 are 634 and 1592 respectively.

Starting from the centos-6c48766848-znkl8 pod, the Terway pod on its ECS instance is terway-eniip-6cfv9. Running `cilium bpf lb list | grep -A5 192.168.27.242` inside that Terway pod shows that eBPF records 10.0.3.38:80 as the backend for ClusterIP 192.168.27.242:80. All of this is programmed through eBPF into the tc hook of the source pod, centos-6c48766848-znkl8.
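From the above it follows that when a pod in the cluster accesses a Service's ClusterIP or External IP (Terway ≥ 1.2.0), the destination is translated into the IP of one of the Service's backend pods inside the pod's network namespace. The packet then leaves the pod through eth0 of the pod's network namespace, enters the ECS instance that hosts the pod, and is forwarded over the IPVLAN tunnel either to a pod on the same ECS instance or out of the ECS instance through the corresponding ENI. In other words, a packet capture taken in the pod or on the ECS instance never shows the Service IP, only pod IPs.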
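Since the Service IP never appears on the wire, a capture has to be filtered on backend pod IPs instead. The helper below is a small sketch that builds a tcpdump-style filter expression from a list of backend IPs; the function name is made up for this example, and the backend list would have to come from the Service's endpoints.

```python
from typing import Iterable, Optional


def capture_filter(backend_ips: Iterable[str], port: Optional[int] = None) -> str:
    """Build a capture filter matching any of the Service's backend pod IPs."""
    ips = sorted(set(backend_ips))
    if not ips:
        raise ValueError("at least one backend IP is required")
    expr = " or ".join(f"host {ip}" for ip in ips)
    if port is not None:
        expr = f"({expr}) and tcp port {port}"
    return expr


# Backend of the nginx Service from the article's example.
print(capture_filter(["10.0.3.38"], 80))
```

With dozens of backends the expression grows quickly, so in practice it helps to also restrict the capture to the client pod's IP.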

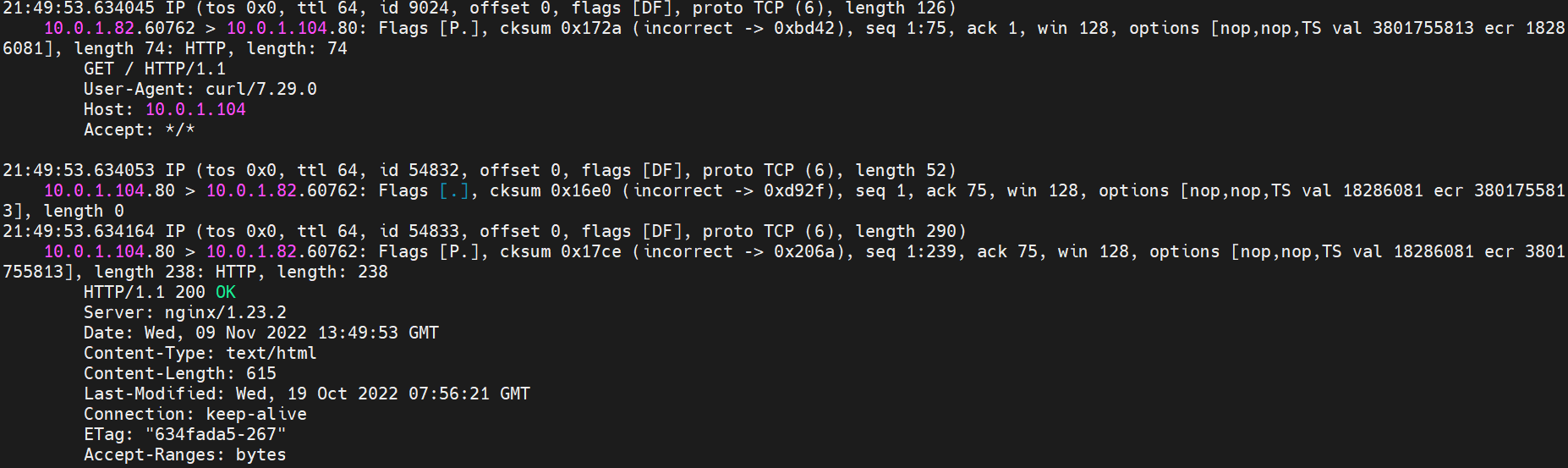

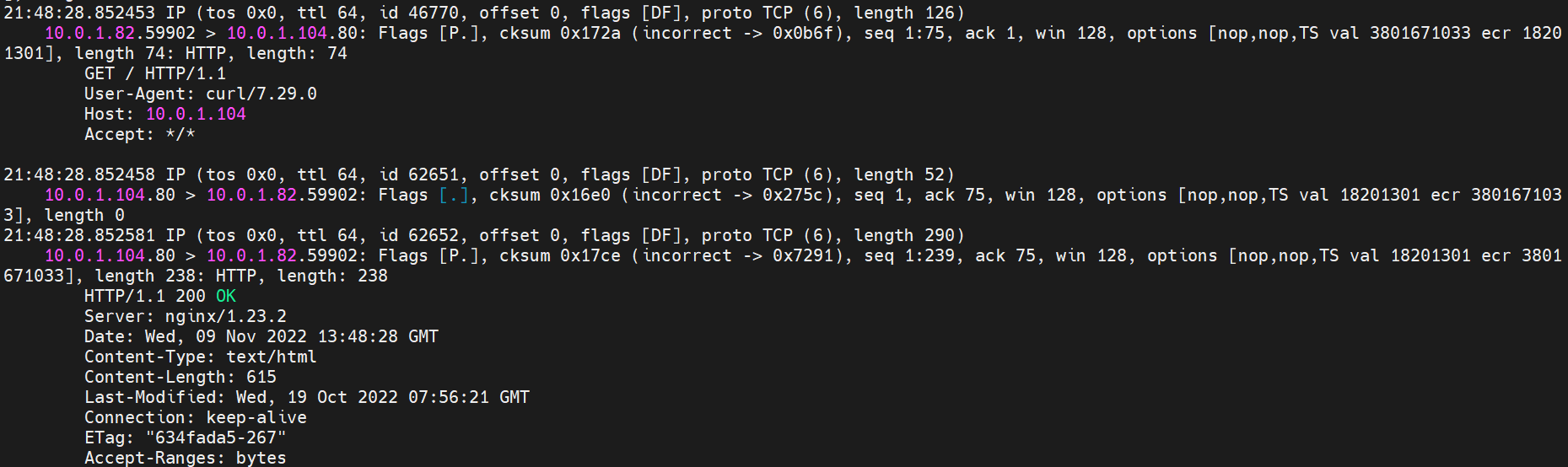

eBPF lets in-cluster access bypass the kernel protocol stack of the ECS OS and part of the pod's own kernel protocol stack. This greatly improves network performance and pod density, with performance no worse than a dedicated ENI, but it also significantly changes how the network can be observed. Suppose services in your cluster call each other by Service IP, and the Service is backed by tens or hundreds of pods. When a call from the source pod fails, the error is typically something like 'connect to <svc IP> failed'. The traditional approach is to capture packets in the source pod, in the destination pod, and on the ECS instances, then filter on the Service IP to stitch together the same flow across captures. With eBPF the Service IP cannot be captured, which makes observing intermittent jitter much harder.

The destination is reachable: access from the client pod centos-6c48766848-znkl8 to the Service succeeds.

A capture on eth0 in the network namespace of the client pod centos-6c48766848-znkl8, filtered on the Service IP and the backend pod IP, shows only the backend pod IP. The Service IP is never captured.

A capture on eth0 in the network namespace of the backend pod nginx-7d6877d777-zp5jg, filtered on the Service IP and the client pod IP, shows only the client pod IP.

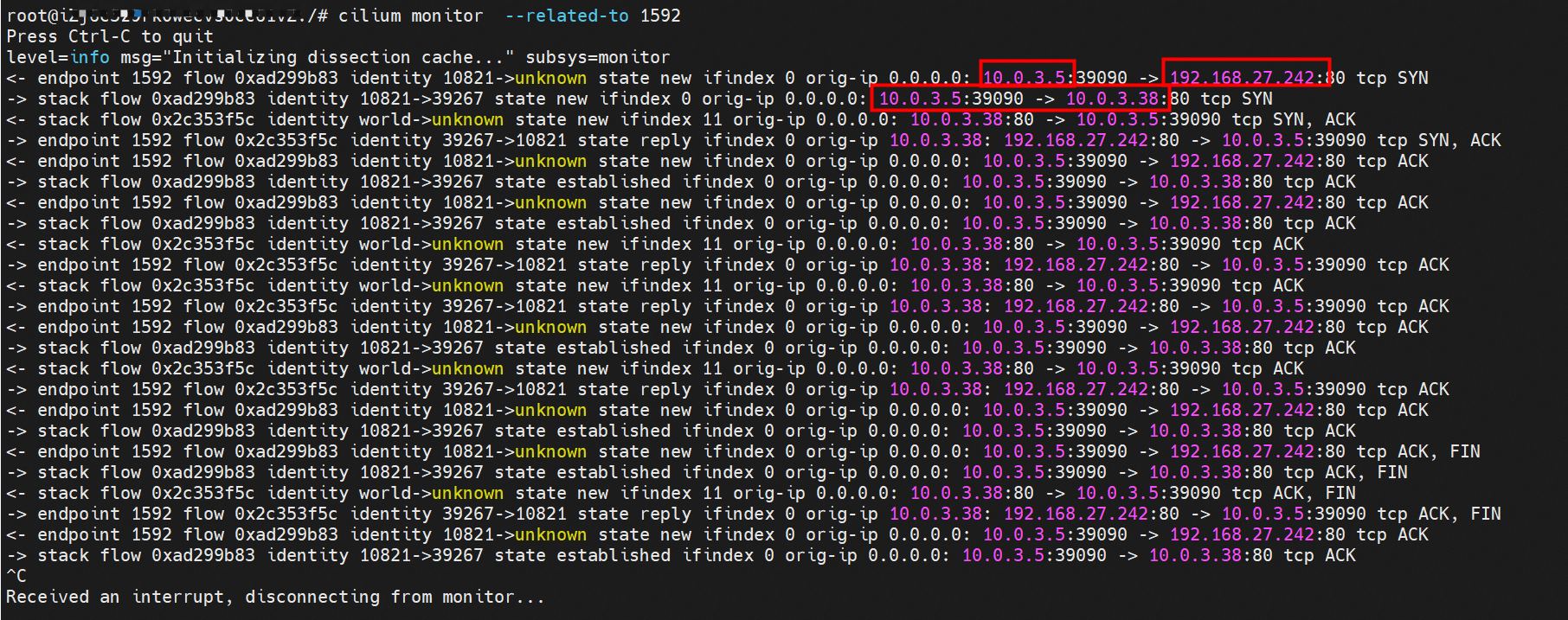

Cilium provides a monitor feature. `cilium monitor --related-to <endpoint ID>` shows the source pod IP accessing Service IP 192.168.27.242, which is then resolved to the backend pod IP 10.0.3.38. This shows that the Service IP is translated directly at the tc layer, and it explains why a packet capture cannot see the Service IP: the capture is taken on the netdev, by which point the packet has already passed through the protocol stack and tc.

Later sections that involve Service IP access do not repeat the detailed capture when the behavior is the same.

Forwarding path:

- Traffic does not pass through any intermediate hop in the host ECS network namespace.
- The request never leaves through the ENI assigned to the pods; it is forwarded to the peer pod inside the ECS instance.
- The full request path is ECS1 Pod1 -> ECS1 Pod2, entirely inside the ECS instance. Compared with the veth policy-routing mode, this avoids two extra hops through the host-side calixxx veth devices, so performance is better.
- eth0 of ECS1 Pod1 cannot capture the Service IP, because eBPF has already translated it into the backend pod IP inside the pod's network namespace.

Scenario 6 (section 2.6): an in-cluster pod accessing a Service ClusterIP, with the backend pod and client pod on different ENIs of the same ECS instance.

Node cn-hongkong.10.0.3.15 hosts two pods, nginx-7d6877d777-j7dqz and busybox-d55494495-8t677, with IPs 10.0.3.38 and 10.0.3.22.

From the Terway pod on this node, `terway-cli show factory` shows that the two IPs belong to different MAC addresses: 10.0.3.22 to 00:16:3e:01:b7:bd and 10.0.3.38 to 00:16:3e:04:08:3a. The two IPs therefore belong to different ENIs, so nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 are on different ENIs.

`describe svc` shows that the nginx pod has been added as a backend of the Service nginx, whose ClusterIP is 192.168.27.242. With Terway ≥ 1.2.0, in-cluster access to a Service's ClusterIP and to its External IP follow the same path, so this section does not cover External IP separately and uses ClusterIP as the example. Access to External IP with Terway < 1.2.0 is covered in later sections.

busybox-d55494495-8t677 has IP 10.0.3.22, and its container PID on the host is 2956974. Its network namespace has exactly one default route, pointing to the container's eth0, so the pod reaches every destination through that route.

nginx-7d6877d777-j7dqz has IP 10.0.3.38, and its container PID on the host is 329470. Its network namespace has a default route pointing to the container's eth0.

ACK uses Cilium to drive eBPF. The Cilium output shows that the identity IDs of nginx-7d6877d777-j7dqz and busybox-d55494495-8t677 are 634 and 3681 respectively.

Starting from the busybox-d55494495-8t677 pod, the Terway pod on its ECS instance is terway-eniip-6cfv9. Running `cilium bpf lb list | grep -A5 192.168.27.242` inside that Terway pod shows that eBPF records 10.0.3.38:80 as the backend for ClusterIP 192.168.27.242:80. All of this is programmed through eBPF into the tc hook of the source pod, busybox-d55494495-8t677.
The eBPF translation of the Service ClusterIP is not described again here; see section 2.5 for details. As described there, the Service IP is translated into the backend pod IP by eBPF inside the network namespace of the client pod busybox, so the Service IP the client accessed cannot be captured on any device. This scenario is therefore very similar to the network architecture in section 2.3, with the addition of the Cilium eBPF translation inside the client pod.

Forwarding path:

- Traffic does not pass through any intermediate hop in the host ECS network namespace.
- Traffic leaves the ECS instance through the ENI of the client pod and re-enters it through the ENI of the destination pod.
- The full request path is ECS1 Pod1 -> ECS1 eth1 -> VPC -> ECS1 eth2 -> ECS1 Pod2.
- The Service IP cannot be captured in the client pod, in the server pod, or on the ECS ENIs, because eBPF has already translated it into the backend pod IP inside the client pod's network namespace.

Scenario 7 (section 2.7): an in-cluster pod accessing a Service ClusterIP, with the backend pod and client pod on different ECS instances.

Node cn-hongkong.10.0.3.15 hosts nginx-7d6877d777-j7dqz with IP 10.0.3.38. Node cn-hongkong.10.0.3.93 hosts centos-6c48766848-dz8hz with IP 10.0.3.127.

From the Terway pod on the node, `terway-cli show factory` shows that nginx-7d6877d777-j7dqz's IP 10.0.3.38 belongs to the ENI with MAC address 00:16:3e:04:08:3a on cn-hongkong.10.0.3.15.

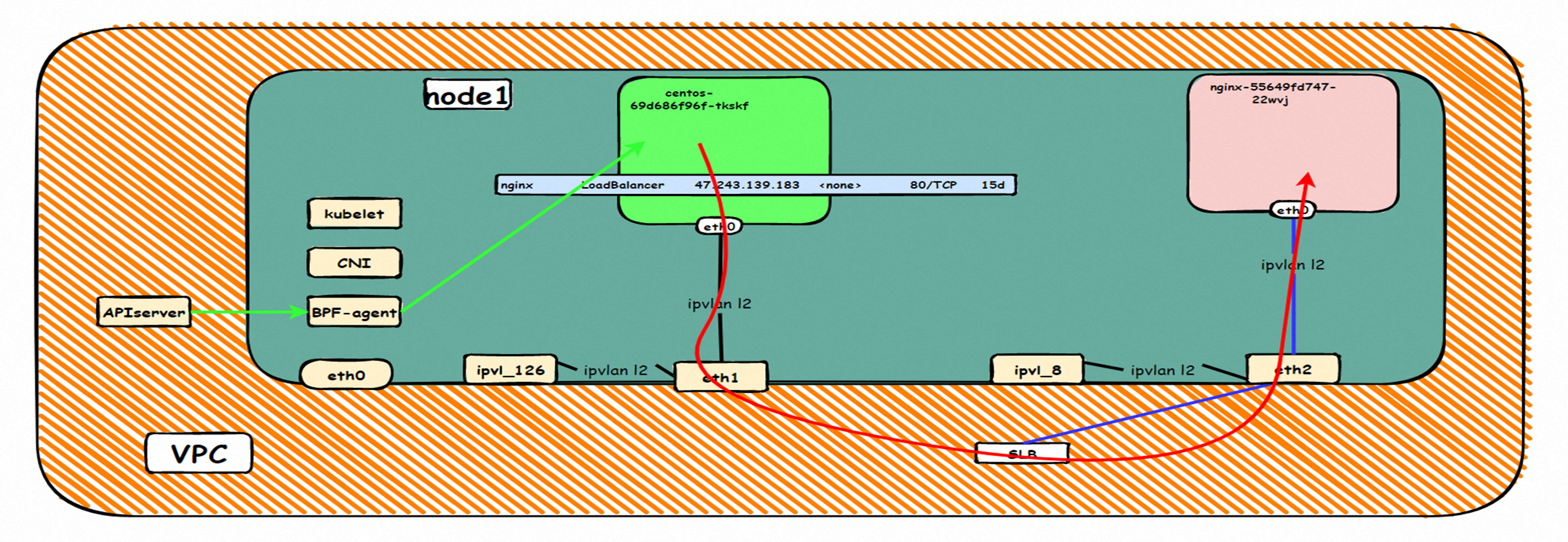

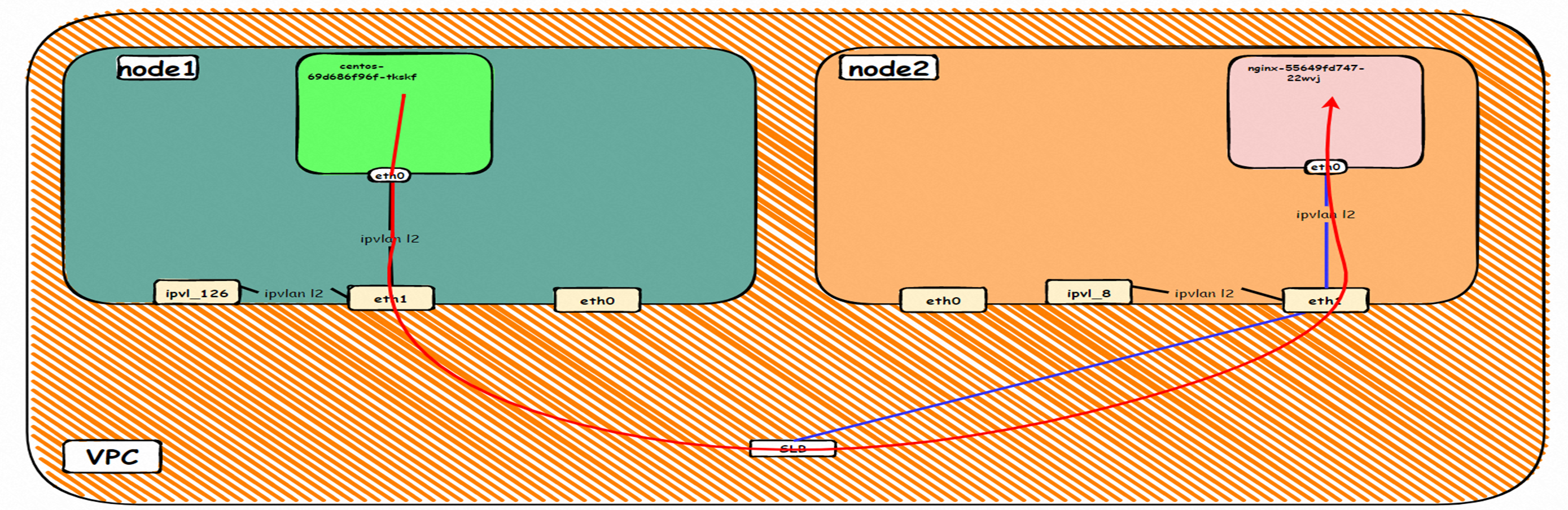

From the Terway pod on the node, `terway-cli show factory` shows that centos-6c48766848-dz8hz's IP 10.0.3.127 belongs to the ENI with MAC address 00:16:3e:02:20:f5 on cn-hongkong.10.0.3.93.

`describe svc` shows that the nginx pod has been added as a backend of the Service nginx, whose ClusterIP is 192.168.27.242. With Terway ≥ 1.2.0, in-cluster access to a Service's ClusterIP and to its External IP follow the same path, so this section does not cover External IP separately and uses ClusterIP as the example. Access to External IP with Terway < 1.2.0 is covered in later sections.

Here a pod accesses the Service's ClusterIP while the backend pod and the client pod run on different ECS instances. The architecture resembles the cross-node pod-to-pod access in section 2.4, except that the pod accesses a Service ClusterIP. Note that this applies to ClusterIP; access to an External IP is more complex and is covered in detail in later sections of this article. For the eBPF translation of the ClusterIP, see section 2.5. As in the previous sections, the Service IP the client accessed cannot be captured on any device.
Data-link forwarding diagram:

- The traffic does not pass through any intermediate node in the host ECS network namespace.
- The whole path leaves the ECS through the ENI that the client Pod belongs to, then enters the destination ECS through the ENI that the destination Pod belongs to.
- The full request path is ECS1 POD1 -> ECS1 eth1 -> VPC -> ECS2 eth1 -> ECS2 POD2.
- The SVC IP cannot be captured inside the client or server Pod, or on the ECS ENIs: eBPF has already translated the SVC IP to the IP of an SVC backend Pod inside the client Pod's network namespace.
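The reason the SVC IP never shows up in a capture can be illustrated with a small model. The sketch below is not Terway's or Cilium's implementation; it is a toy Python stand-in for the service lookup that eBPF performs inside the client Pod's network namespace. The class and function names, the round-robin backend choice, and port 80 are all assumptions made for illustration.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Endpoint:
    ip: str
    port: int


class ServiceTable:
    """Toy stand-in for the service map consulted in the client pod's netns."""

    def __init__(self):
        self._backends = {}

    def add_service(self, cluster_ip, port, backends):
        # Round-robin is an assumption; the real backend selection may differ.
        self._backends[Endpoint(cluster_ip, port)] = cycle(backends)

    def translate(self, dst):
        """Return a backend for a service address, or dst unchanged otherwise."""
        picker = self._backends.get(dst)
        if picker is None:
            return dst
        return next(picker)


def packet_seen_on_wire(table, src_ip, dst):
    """The destination is rewritten before the packet reaches any device."""
    real_dst = table.translate(dst)
    return (src_ip, real_dst.ip, real_dst.port)


table = ServiceTable()
# ClusterIP and pod IPs come from the example above; port 80 is assumed.
table.add_service("192.168.27.242", 80, [Endpoint("10.0.3.38", 80)])
print(packet_seen_on_wire(table, "10.0.3.127", Endpoint("192.168.27.242", 80)))
```

Because the rewrite happens before the packet leaves the Pod, a capture on the Pod interface or on the ENI only ever sees the backend Pod IP as the destination.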

Please refer to Section 2.5.

Because the client Pod and the accessed SVC backend Pod belong to the same ENI, with Terway earlier than 1.2.0 a request to the External IP actually leaves the ECS through the ENI, reaches the SLB behind the External IP, and is then forwarded back to the same ENI. A layer-4 SLB currently does not support the same ENI acting as both client and server, so a loopback forms. For details, see the links below:

https://help.aliyun.com/document_detail/97467.html#section-krj-kqf-14s

https://help.aliyun.com/document_detail/55206.htm

Data-link forwarding diagram:

- The full request path is ECS1 POD1 -> ECS1 eth1 -> VPC -> SLB -> interrupted.
- With Terway earlier than 1.2.0, in-cluster access to the External IP leaves the ECS ENI for the SLB, and the SLB forwards it back to an ECS ENI. If the ENI of the source Pod and the ENI the traffic is forwarded to are the same, the access fails because the layer-4 SLB forms a loopback.

Solutions (either one):

- Configure the SLB with a layer-7 listener through an SVC annotation.
- Upgrade Terway to 1.2.0 or later and enable in-cluster load balancing. Kube-Proxy short-circuits in-cluster traffic to ExternalIP and LoadBalancer addresses: traffic from inside the cluster to these external addresses does not actually leave the cluster, but is turned into direct access to the corresponding backend Endpoint. In Terway IPvlan mode, Pod traffic to these addresses is handled by Cilium rather than kube-proxy, and Terway versions before v1.2.0 do not support short-circuiting this path. Since the release of Terway v1.2.0, new clusters enable this feature by default; existing clusters do not. (This is the Section 2.5 scenario.)

https://help.aliyun.com/document_detail/197320.htm

https://help.aliyun.com/document_detail/113090.html#p-lim-520-w07

The environment here is similar to Section 2.6 and is not described again. The only difference is that this section accesses External IP 47.243.139.183 with Terway earlier than 1.2.0.

Please refer to Sections 2.6 and 2.8.

Although the client Pod and the accessed SVC backend Pod are on the same ECS, they do not belong to the same ENI. With Terway earlier than 1.2.0, a request to the External IP therefore leaves the ECS through the client Pod's ENI, reaches the SLB behind the External IP, and is then forwarded to a different ENI. From the outside, the client Pod and the SVC backend Pod appear to be on the same ECS, but because they belong to different ENIs, no loopback forms and the access succeeds. Note that this result is completely different from Section 2.8.

Data-link forwarding diagram:

- The full request path is ECS1 POD1 -> ECS1 eth1 -> VPC -> SLB -> ECS1 eth2 -> ECS1 POD2.
- With Terway earlier than 1.2.0, in-cluster access to the External IP leaves the ECS ENI for the SLB, and the SLB forwards it back to an ECS ENI. If the ENI of the source Pod and the ENI the traffic is forwarded to are the same, the access fails because the layer-4 SLB forms a loopback.
- If the ENI of the source Pod and the ENI the traffic is forwarded to are different ENIs on the same node, the access succeeds.

The environment here is similar to Section 2.6 and is not described again. The only difference is that this section accesses External IP 47.243.139.183 with Terway earlier than 1.2.0.

Please refer to Sections 2.7 and 2.9.
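The rules for in-cluster access to an External IP can be condensed into a small decision function. This is a minimal sketch of the behaviour described in the text, not code from Terway; the names PodPlacement, external_ip_path and in_cluster_lb, and the ENI identifiers in the usage lines, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodPlacement:
    node: str  # ECS node the pod runs on
    eni: str   # ENI the pod's IP is attached to


def parse_version(text):
    """Turn '1.2.0' or 'v1.2.0' into a comparable tuple of integers."""
    return tuple(int(part) for part in text.lstrip("v").split("."))


def external_ip_path(terway_version, client, backend, in_cluster_lb=True):
    """Predict the outcome of a pod accessing an SVC External IP."""
    # From 1.2.0 on, with in-cluster load balancing enabled, the request is
    # resolved to a backend endpoint and never leaves for the SLB.
    if parse_version(terway_version) >= (1, 2, 0) and in_cluster_lb:
        return "short-circuited in the client pod; traffic does not reach the SLB"
    # Otherwise the request goes out to the layer-4 SLB, which cannot forward
    # it back to the ENI it came from.
    if client.node == backend.node and client.eni == backend.eni:
        return "fails: the layer-4 SLB forwards back to the source ENI (loopback)"
    return "succeeds via the SLB"


client = PodPlacement("ecs1", "eni-a")
print(external_ip_path("1.1.9", client, PodPlacement("ecs1", "eni-a")))
print(external_ip_path("1.1.9", client, PodPlacement("ecs1", "eni-b")))
print(external_ip_path("1.2.0", client, PodPlacement("ecs1", "eni-a")))
```

The in_cluster_lb flag reflects the note that clusters created before Terway v1.2.0 do not enable the feature automatically, so upgrading alone may not change the path.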

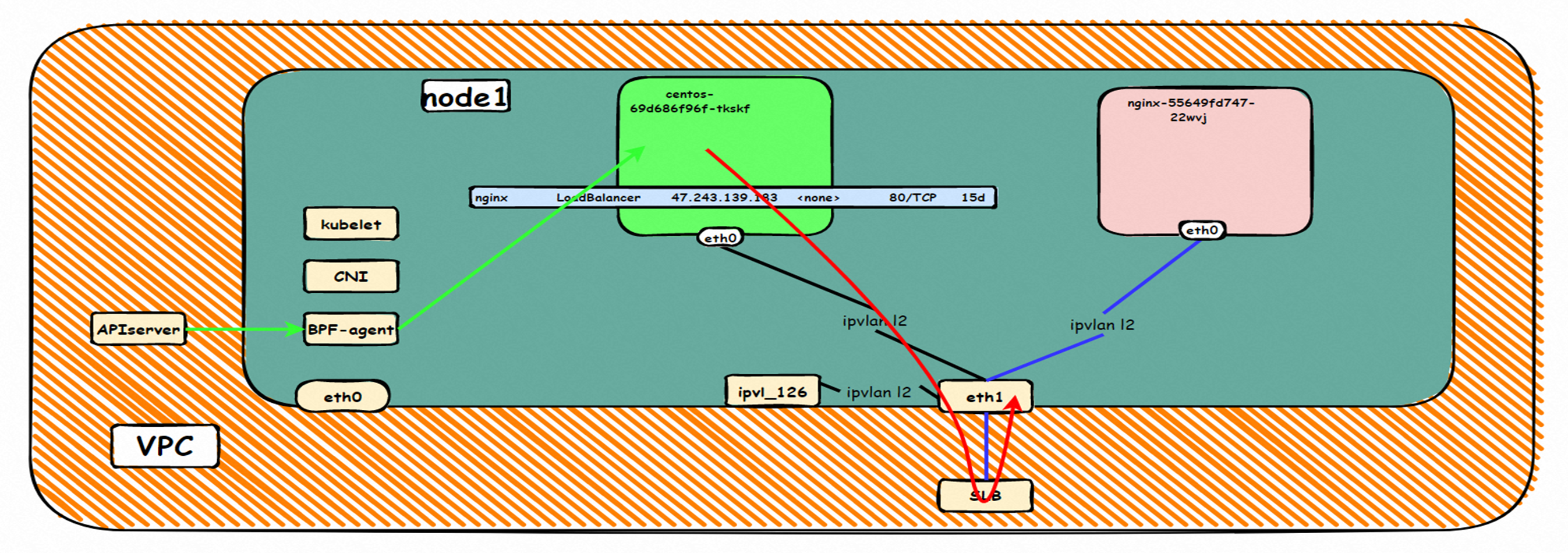

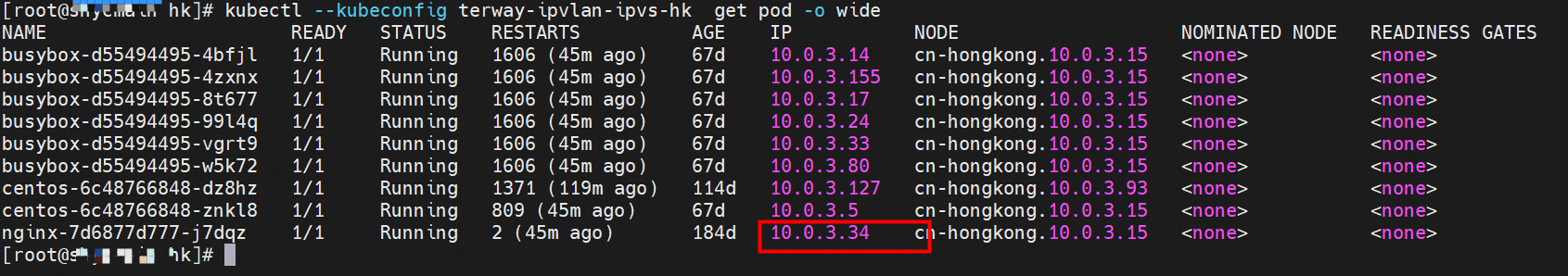

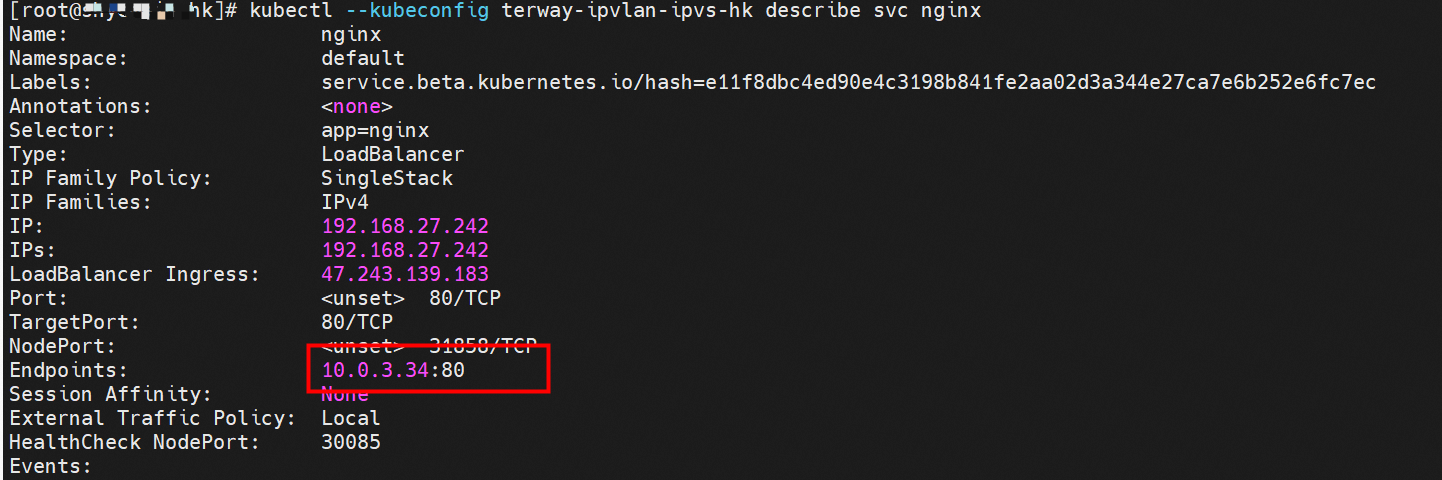

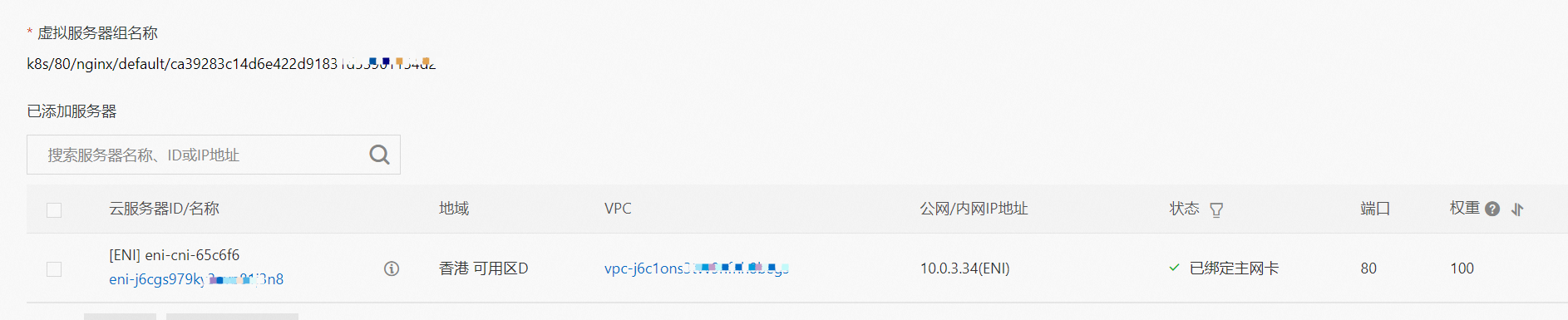

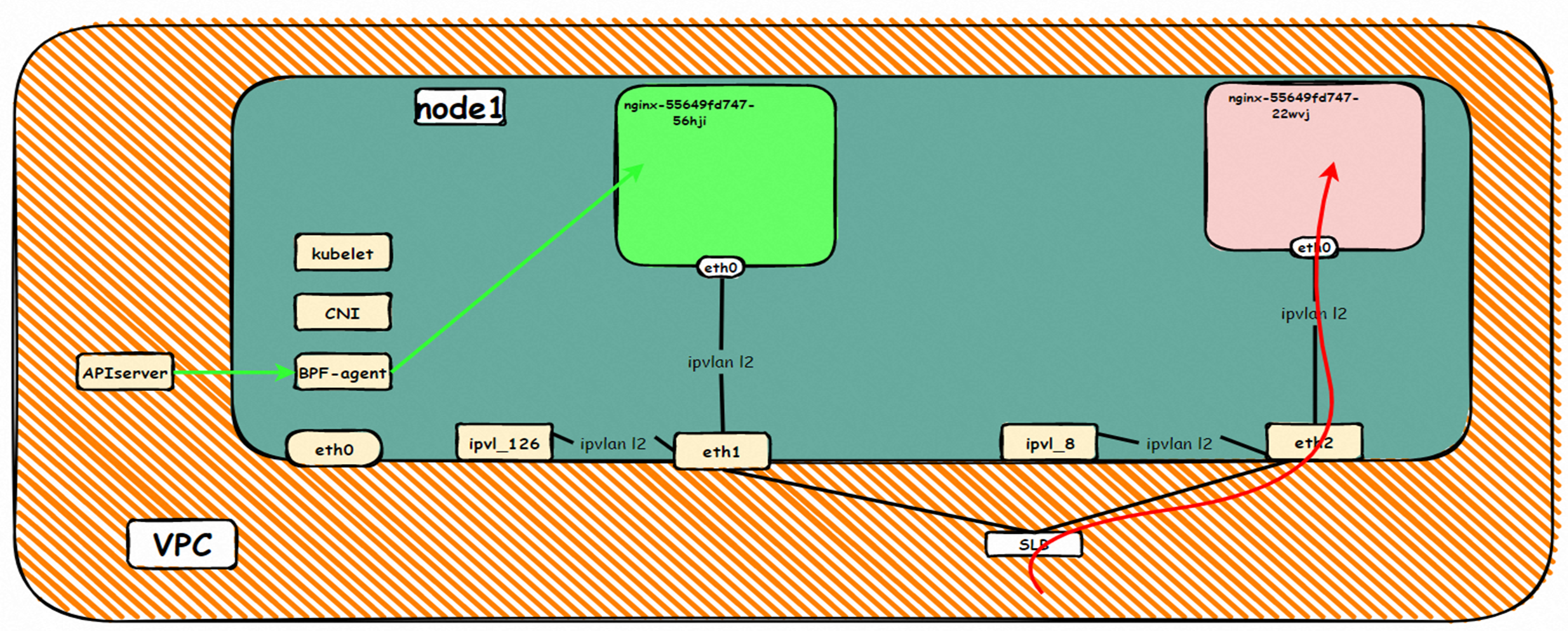

This scenario is similar in architecture to Section 2.7: the client Pod and the SVC backend Pod are on different ECS nodes, and the client accesses the SVC's External IP. Only the Terway version differs. Section 2.7 uses Terway ≥ 1.2.0 and this section uses Terway < 1.2.0. Although the only differences are the Terway version and the eniconfig, one access path goes through the SLB and the other does not, which deserves attention. The reason is that Terway 1.2.0 and later enable in-cluster load balancing, so access to the ExternalIP is load-balanced to the Service CIDR. For details, see Section 2.8.

Data-link forwarding diagram:

- The full request path is ECS1 POD1 -> ECS1 eth1 -> VPC -> SLB -> ECS2 eth1 -> ECS2 POD2.
- With Terway earlier than 1.2.0, in-cluster access to the External IP leaves the ECS ENI for the SLB, and the SLB forwards it back to an ECS ENI. If the ENI of the source Pod and the ENI the traffic is forwarded to are the same, the access fails because the layer-4 SLB forms a loopback.

Pod nginx-7d6877d777-j7dqz runs on node cn-hongkong.10.0.3.15 with IP 10.0.3.34.

describe svc shows that the nginx pod has been added as a backend of the nginx SVC. The SVC's ClusterIP is 192.168.27.242.

In the SLB console, the backend servers in the virtual server group of lb-j6cj07oi6uc705nsc1q4m are the ENI eni-j6cgs979ky3evp81j3n8 of the two backend nginx pods.

From outside the cluster, the SLB's backend virtual server group consists of the ENIs that the SVC backend Pods belong to, and the private IP address of each backend is the Pod's address.
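The relationship between backend Pods and SLB backend entries can be sketched as a grouping by ENI. This is an illustrative model only, not the cloud controller's logic; BackendPod and slb_backends_by_eni are hypothetical names, and the second pod IP and port 80 are made up for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class BackendPod:
    ip: str      # pod IP, which is also the private IP registered on the SLB
    eni_id: str  # ENI the pod IP is attached to


def slb_backends_by_eni(pods, port):
    """Group SLB backend entries (pod IP and port) under the ENI that carries them."""
    groups = defaultdict(list)
    for pod in pods:
        groups[pod.eni_id].append(f"{pod.ip}:{port}")
    return dict(groups)


pods = [
    BackendPod("10.0.3.34", "eni-j6cgs979ky3evp81j3n8"),
    # Hypothetical second nginx pod sharing the same ENI.
    BackendPod("10.0.3.35", "eni-j6cgs979ky3evp81j3n8"),
]
print(slb_backends_by_eni(pods, 80))
```

Two Pods that share one ENI appear as two backend addresses under the same ENI, which matches what the console shows for the two nginx pods.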
Data-link forwarding diagram:

- Whether ExternalTrafficPolicy is Local or Cluster, the SLB only attaches the ENIs assigned to the Pods to its virtual server group.
- Data path: client -> SLB -> Pod ENI + Pod Port -> ECS1 Pod1 eth0

This article focuses on the data-link forwarding paths of ACK in Terway IPVLAN+EBPF mode across different SOP scenarios. Driven by customers' demand for performance, Terway IPVLAN+EBPF offers higher Pod density than Terway ENI and higher performance than Terway ENIIP, but it also makes the network path more complex and harder to observe. The mode can be divided into 11 SOP scenarios. Working through the forwarding path, implementation principles, and cloud product configuration of each scenario gives an initial direction for handling link jitter and configuration optimization under the Terway IPVLAN architecture.

By using eBPF and the IPVLAN tunnel, this mode avoids forwarding through the kernel protocol stack of the ECS OS, which brings higher performance, and it shares one ENI among multiple IPs in the same way as ENIIP mode to maintain Pod density. However, as business scenarios grow more complex, ACL control increasingly needs to be managed per Pod, for example when security ACL rules must be set for individual Pods.

The next article in this series covers Terway ENI-Trunking mode: "Panoramic Analysis of Alibaba Cloud Container Network Data Links (5): Terway ENI-Trunking".
